Kubernetes: large-scale orchestration, management, scaling, and deployment of Docker containers.

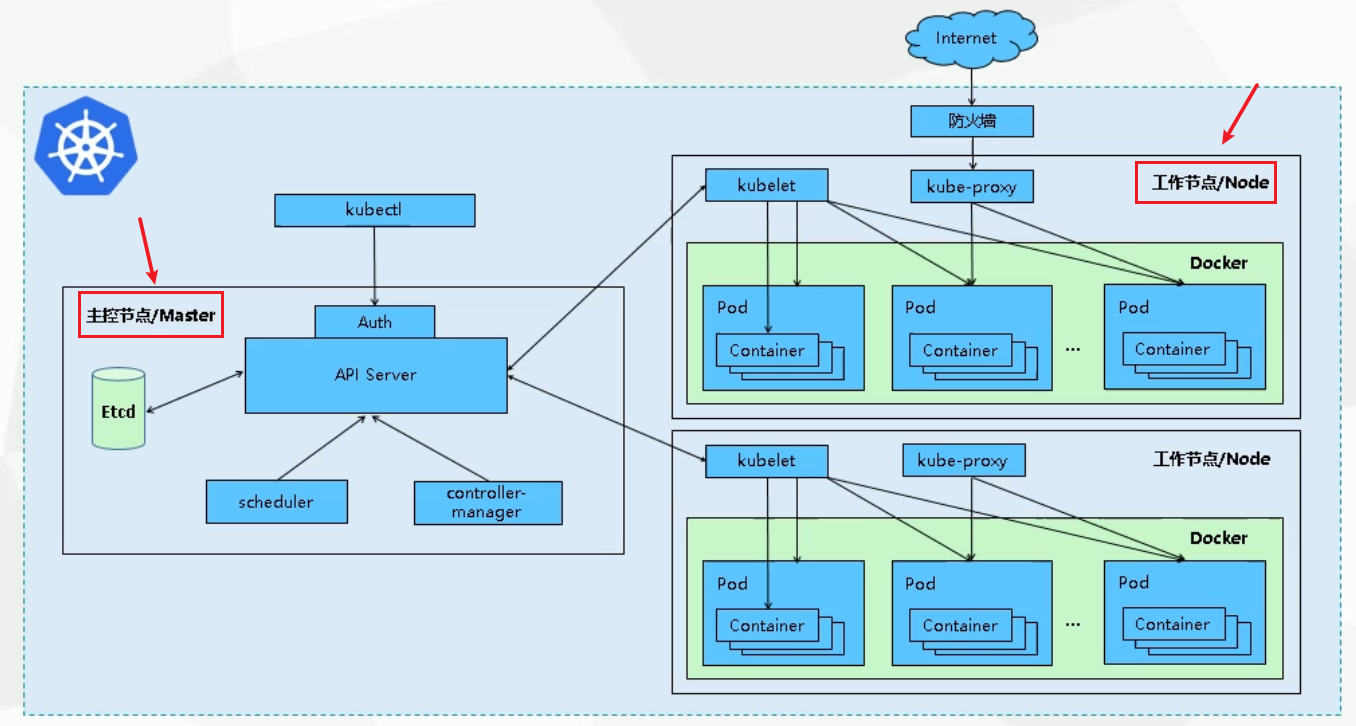

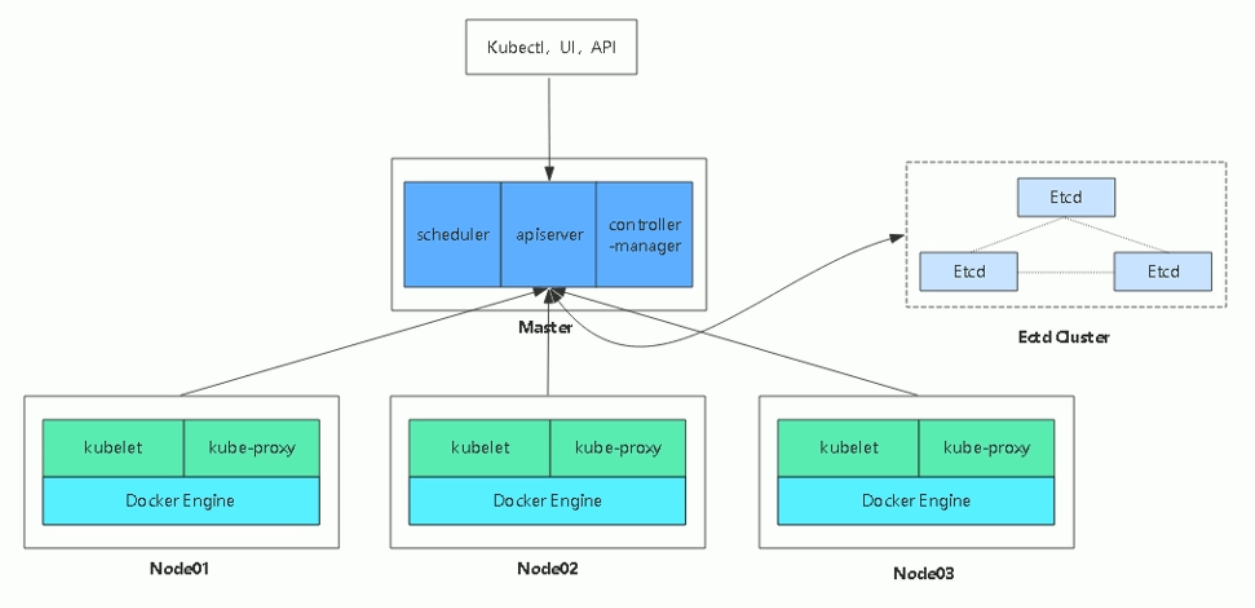

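Architecture and component diagram

Master components

kube-apiserver: the Kubernetes API. It is the single entry point to the cluster and the coordinator between components. It exposes a RESTful API; every create, read, update, delete, and watch operation on resource objects goes through the API server, which then persists the result in etcd.

kube-controller-manager: handles the routine background tasks of the cluster. Each resource type has its own controller, and the controller manager is responsible for running those controllers.

kube-scheduler: selects a Node for each newly created Pod according to the scheduling algorithm. The master components can be placed freely, either all on one node or spread across different nodes.

etcd: a distributed key-value store that holds the cluster state, such as Pod and Service objects.

Node components

kubelet: the master's agent on each Node. It manages the lifecycle of the containers running on that machine: creating containers, mounting Pod volumes, downloading secrets, and reporting container and node status. The kubelet turns each Pod into a set of containers.

kube-proxy: implements the Pod network proxy on each Node, maintaining network rules and layer-4 load balancing.

docker or rkt (Rocket): the container engine that runs the containers.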

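Core concepts

pod

The smallest deployable unit.
A group of one or more containers.
Containers in the same Pod share a network namespace.
Pods are ephemeral.

controllers

ReplicaSet: keeps the expected number of Pod replicas running.
Deployment: deploys stateless applications.
StatefulSet: deploys stateful applications.
DaemonSet: makes sure every Node runs a copy of the same Pod.
Job: one-off tasks.
CronJob: scheduled tasks.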
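To make the controller list more concrete, here is a minimal sketch of a Deployment manifest generated from shell. The function name `render_deployment` and the `app` label key are illustrative assumptions, not something taken from these notes; the manifest fields themselves (`apps/v1`, `spec.replicas`, `spec.selector.matchLabels`) are standard Deployment fields.

```shell
# Print a minimal Deployment manifest to stdout.
# Arguments: <name> <image> <replicas>
# The "app" label key is an illustrative choice, not a requirement.
render_deployment() {
    name=$1
    image=$2
    replicas=$3
    cat <<EOF
apiVersion: apps/v1
kind: Deployment
metadata:
  name: $name
  labels:
    app: $name
spec:
  replicas: $replicas
  selector:
    matchLabels:
      app: $name
  template:
    metadata:
      labels:
        app: $name
    spec:
      containers:
      - name: $name
        image: $image
EOF
}
```

On a working cluster the output could be piped into `kubectl apply -f -`, for example `render_deployment nginx nginx 3 | kubectl apply -f -`. The Deployment creates a ReplicaSet, which in turn keeps three Pods running.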

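service

Keeps a set of Pods reachable even as individual Pods come and go.
Defines the access policy for a group of Pods.

label

A tag attached to a resource, used to associate, query, and filter objects.

namespace

Logically isolates objects from one another.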

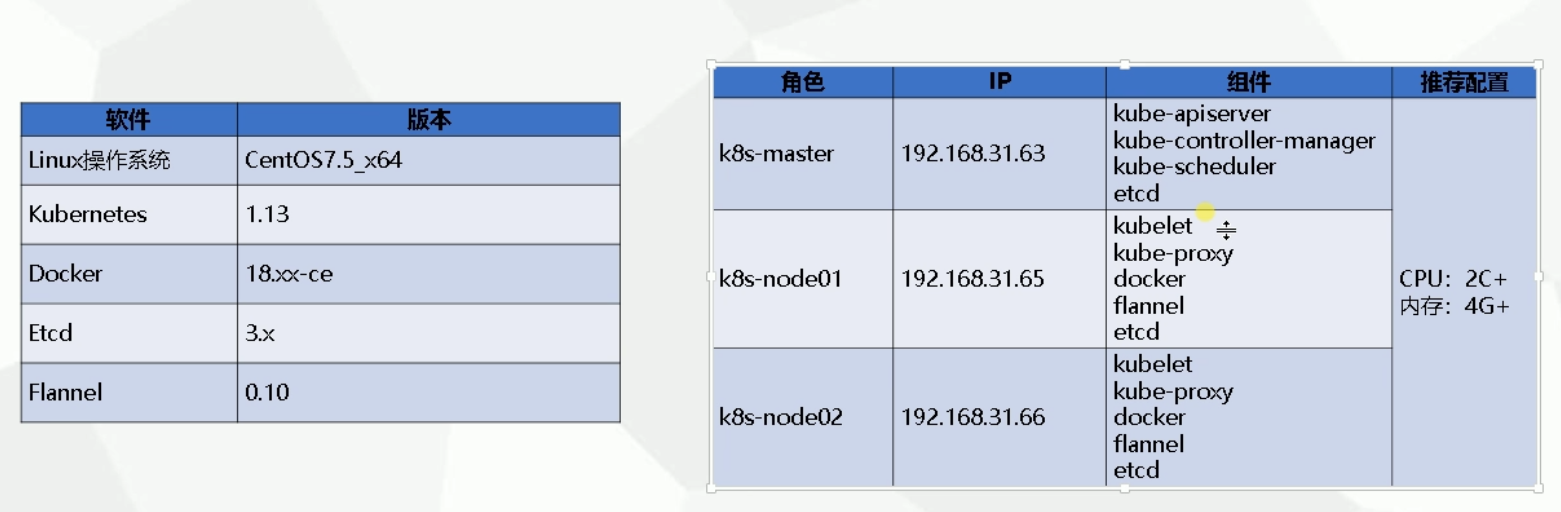

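Deploying a k8s cluster from binaries

Cluster planning

1. Deploy the etcd database cluster

Preparation

Disable SELinux.
Disable the firewall.

Generate self-signed SSL certificates

    # Upload cfssl.sh and etcd-cert.sh
    # Synchronize the clock on all three machines
    ntpdate time.windows.com
    # Run the cfssl script to download and install the tools. If the download fails, upload and extract the pre-packaged cfssl.zip, then carry out the remaining steps of the script by hand
    # Run etcd-cert.sh to generate the certificates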
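The contents of etcd-cert.sh are not shown in these notes. As a rough sketch of what such a script typically feeds to cfssl, the function below prints a server certificate signing request in cfssl's JSON format. The function name `render_server_csr`, the `CN` value, and the `names` values are placeholder assumptions; the important part is that every etcd member IP appears in `hosts`.

```shell
# Print a cfssl CSR definition whose "hosts" list contains every IP passed in.
# CN and the "names" block are placeholder values (assumptions).
render_server_csr() {
    hosts=""
    for ip in "$@"; do
        hosts="${hosts:+$hosts, }\"$ip\""
    done
    cat <<EOF
{
  "CN": "etcd",
  "hosts": [$hosts],
  "key": { "algo": "rsa", "size": 2048 },
  "names": [ { "C": "CN", "L": "Beijing", "ST": "Beijing" } ]
}
EOF
}

# Typical follow-up, assuming ca.pem, ca-key.pem and ca-config.json already
# exist and that ca-config.json defines a profile named "www" (an assumption):
#   render_server_csr 192.168.31.241 192.168.31.42 192.168.31.43 > server-csr.json
#   cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json \
#       -profile=www server-csr.json | cfssljson -bare server
```

If a member IP is missing from `hosts`, TLS verification between etcd members fails, which is a common cause of the "member information is wrong" situation described later.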

Configure the etcd cluster

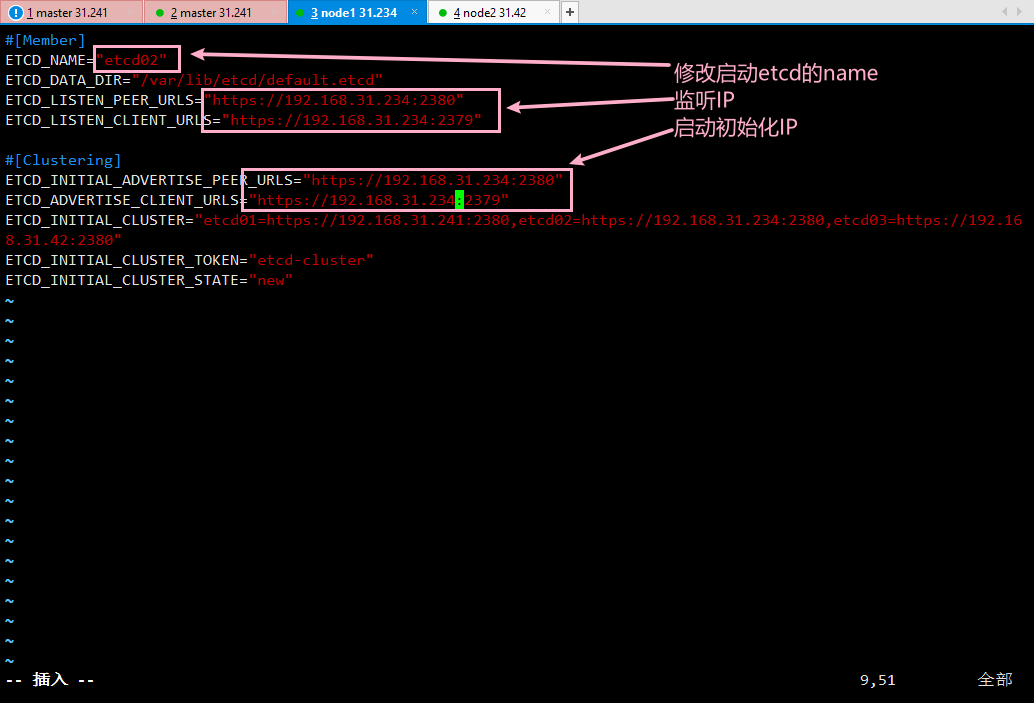

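    # Upload etcd-v3.3.10-linux-amd64.tar.gz and extract it. In the extracted directory, etcd is the server binary and etcdctl is the management client
    # Create directories to keep things organized
    mkdir -p /opt/etcd/{cfg,bin,ssl}
    # Move the binaries into place
    mv etcd etcdctl /opt/etcd/bin
    # Upload the etcd.sh deployment script, adjust its parameters, and run it
    ./etcd.sh etcd01 192.168.31.241 etcd02=https://192.168.31.42:2380,etcd03=https://192.168.31.43:2380
    # The certificates have not been copied yet, so the first start fails. Copy them and start again
    cp /k8s/etcd-cert/{ca,server,server-key}.pem /opt/etcd/ssl/
    systemctl start etcd
    # PS: the start command hangs because the other two members have not joined yet. Follow the startup with: tail -f /var/log/messages
    # Copy the etcd configuration and the service unit to the other two machines
    scp -r /opt/etcd/ root@192.168.31.42:/opt/
    scp -r /opt/etcd/ root@192.168.31.43:/opt/
    scp /usr/lib/systemd/system/etcd.service root@192.168.31.42:/usr/lib/systemd/system/
    scp /usr/lib/systemd/system/etcd.service root@192.168.31.43:/usr/lib/systemd/system/
    # Edit the etcd configuration file on the other two machines as shown in the figure, then start etcd
    vim /opt/etcd/cfg/etcd
    systemctl daemon-reload && systemctl start etcd
    # On the master, check the cluster health
    /opt/etcd/bin/etcdctl --ca-file=/opt/etcd/ssl/ca.pem --cert-file=/opt/etcd/ssl/server.pem --key-file=/opt/etcd/ssl/server-key.pem --endpoints="https://192.168.31.241:2379,https://192.168.31.42:2379,https://192.168.31.43:2379" cluster-health
    # PS: if the member information is wrong, delete etcd's working data under /var/lib/etcd/default.etcd/member/, fix the certificates and the etcd configuration file, then reload the unit files and restart etcd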
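The long `--endpoints` value is repeated in several commands in these notes and is easy to mistype. A small helper can build it from the member IPs; the function name `etcd_endpoints` is a hypothetical convenience, not part of etcd or of the scripts used here.

```shell
# Build a comma-separated https endpoint list for etcdctl.
# Arguments: <port> <host> [<host> ...]
etcd_endpoints() {
    port=$1
    shift
    out=""
    for host in "$@"; do
        out="${out:+$out,}https://${host}:${port}"
    done
    printf '%s\n' "$out"
}
```

For example, `ENDPOINTS=$(etcd_endpoints 2379 192.168.31.241 192.168.31.42 192.168.31.43)` yields the same string used in the `cluster-health` command, and it can then be passed as `--endpoints="$ENDPOINTS"`.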

2. Install docker on the node machines

    # Install dependencies
    yum install -y yum-utils device-mapper-persistent-data lvm2
    # Add the yum repository
    yum-config-manager --add-repo http://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo
    # Install docker-ce (community edition)
    yum -y install docker-ce
    # PS: to install a specific version
    #   list the available versions:
    yum list docker-ce.x86_64 --showduplicates | sort -r
    #   install the chosen one, replacing [VERSION] with a concrete docker-ce version:
    yum -y install docker-ce-[VERSION]
    # Configure the DaoCloud registry mirror
    curl -sSL https://get.daocloud.io/daotools/set_mirror.sh | sh -s http://f1361db2.m.daocloud.io
    # Start docker
    systemctl restart docker

3. Deploy the CNI container network (Flannel)

Create the subnet from the master

    # Create the 172.16.0.0/16 network with the vxlan backend
    /opt/etcd/bin/etcdctl --ca-file=/opt/etcd/ssl/ca.pem --cert-file=/opt/etcd/ssl/server.pem --key-file=/opt/etcd/ssl/server-key.pem --endpoints="https://192.168.31.241:2379,https://192.168.31.42:2379,https://192.168.31.43:2379" set /coreos.com/network/config '{"Network":"172.16.0.0/16","Backend":{"Type":"vxlan"}}'
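The value stored under /coreos.com/network/config is plain JSON, so it can be generated rather than typed inline. The helper name `flannel_net_config` below is a hypothetical one; the JSON keys `Network` and `Backend.Type` are the ones used in the command above.

```shell
# Print the flannel network configuration JSON.
# Arguments: <pod network CIDR> <backend type>
flannel_net_config() {
    printf '{"Network":"%s","Backend":{"Type":"%s"}}\n' "$1" "$2"
}

# Example use with the v2 API, which is the keyspace this flannel setup reads
# (certificate and endpoint flags omitted here for brevity):
#   ETCDCTL_API=2 /opt/etcd/bin/etcdctl ... set /coreos.com/network/config \
#       "$(flannel_net_config 172.16.0.0/16 vxlan)"
```

Reading the key back with `get /coreos.com/network/config` under the same API version is a quick way to confirm that flanneld will find it.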

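Deploy on the node machines

    # Create the Kubernetes working directories first
    mkdir -p /opt/kubernetes/{bin,ssl,cfg}
    # Upload flannel-v0.10.0-linux-amd64.tar.gz and extract it into the Kubernetes bin directory
    tar -zxvf flannel-v0.10.0-linux-amd64.tar.gz -C /opt/kubernetes/bin/
    # Upload the flannel.sh script and run it, passing the etcd member addresses
    ./flannel.sh https://192.168.31.241:2379,https://192.168.31.42:2379,https://192.168.31.43:2379
    # PS: error "failed to retrieve network config: 100: Key not found (/coreos.com)"
    #   Cause: in etcd v3.4.3, even with compatibility mode enabled, data written with v2 commands and data written with v3 commands are not visible to each other
    #   Fix: when creating the subnet on the master, force the v2 API for the set command, for example: ETCDCTL_API=2 /opt/etcd/bin/etcdctl .....
    # Restart docker so that containers get their IPs from the flannel-assigned subnet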
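The last step works because flanneld records its subnet lease in an environment file (by default /run/flannel/subnet.env) and docker is started with a matching bridge address. The flannel.sh script itself is not shown in these notes, so the following is only a sketch of that hand-off; the function name `docker_opts_from_subnet_env` is hypothetical.

```shell
# Turn a flannel subnet.env file into docker daemon network options.
# Argument: path to the env file (must contain a slash, e.g. /run/flannel/subnet.env).
docker_opts_from_subnet_env() {
    env_file=$1
    # Source the KEY=VALUE pairs in a subshell so they do not leak into the caller.
    (
        . "$env_file"
        printf '%s\n' "--bip=${FLANNEL_SUBNET} --mtu=${FLANNEL_MTU}"
    )
}
```

With a lease such as `FLANNEL_SUBNET=172.16.52.1/24` and `FLANNEL_MTU=1450`, the function prints `--bip=172.16.52.1/24 --mtu=1450`. After the restart, `ip addr show docker0` should show an address inside the flannel subnet.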

4. Install k8s

Master node configuration

kube-apiserver

kube-controller-manager

kube-scheduler

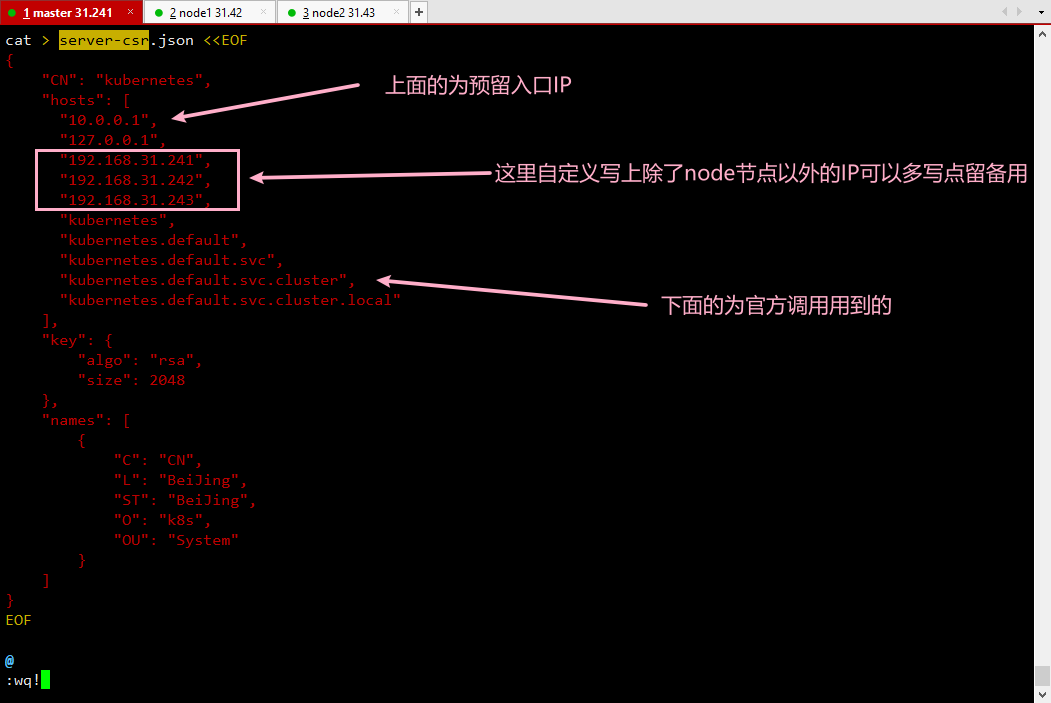

    # Upload kubernetes-server-linux-amd64.tar.gz, extract it, and copy the following binaries from kubernetes/server/bin into the custom Kubernetes home directory
    mkdir -p /opt/kubernetes/{bin,cfg,ssl}
    cp kube-apiserver kube-controller-manager kube-scheduler kubectl /opt/kubernetes/bin/
    # Upload master.zip, extract it, and start the apiserver
    ./apiserver.sh 192.168.31.241 https://192.168.31.241:2379,https://192.168.31.42:2379,https://192.168.31.43:2379
    # Create a custom directory for the Kubernetes logs
    mkdir -p /opt/kubernetes/logs
    # Edit the apiserver main configuration file
    vim /opt/kubernetes/cfg/kube-apiserver
    #   change the first line to:  KUBE_APISERVER_OPTS="--logtostderr=false \
    #   add as the second line:    --log-dir=/opt/kubernetes/logs \
    # Upload k8s-cert.sh to generate the Kubernetes certificates. First adjust the server-csr.json content it writes, as shown in the figure
    ./k8s-cert.sh
    # Copy the required certificates into the ssl directory of the custom Kubernetes home
    cp ca.pem kube-proxy.pem server.pem server-key.pem ca-key.pem /opt/kubernetes/ssl/
    # Create the token.csv file that the apiserver needs
    cat > /opt/kubernetes/cfg/token.csv <<EOF
    0fb61c46f8991b718eb38d27b605b008,kubelet-bootstrap,10001,"system:kubelet-bootstrap"
    EOF
    # Create the user named in token.csv and bind it to the built-in cluster role
    ./kubectl create clusterrolebinding kubelet-bootstrap \
      --clusterrole=system:node-bootstrapper \
      --user=kubelet-bootstrap
    # PS: to delete the binding
    ./kubectl delete clusterrolebinding kubelet-bootstrap
    # In the extracted master.zip directory, set up controller-manager and scheduler
    ./controller-manager.sh 127.0.0.1
    ./scheduler.sh 127.0.0.1
    # PS: these two components only talk to the apiserver locally, over its default insecure port 8080
    # Check the cluster status
    ./kubectl get cs
    # Upload kubeconfig.sh, delete lines 5-7 (the code that generates the csv), and generate the bootstrap and kube-proxy kubeconfig files
    ./kubeconfig.sh 192.168.31.241 /opt/kubernetes/ssl
    # Copy them to the two node machines
    scp bootstrap.kubeconfig kube-proxy.kubeconfig root@192.168.31.42:/opt/kubernetes/cfg/
    scp bootstrap.kubeconfig kube-proxy.kubeconfig root@192.168.31.43:/opt/kubernetes/cfg/
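The token in token.csv is a fixed example value. A fresh random token can be generated instead; the sketch below uses the common `/dev/urandom` plus `od` recipe, and the function names are hypothetical. The CSV layout (token, user, uid, group) matches the line shown above.

```shell
# Generate a random 32-character hexadecimal token (16 random bytes).
new_bootstrap_token() {
    head -c 16 /dev/urandom | od -An -t x1 | tr -d ' \n'
}

# Print one token.csv line for the kubelet-bootstrap user.
# Argument: <token>
render_token_csv() {
    printf '%s,kubelet-bootstrap,10001,"system:kubelet-bootstrap"\n' "$1"
}
```

For example, `render_token_csv "$(new_bootstrap_token)" > /opt/kubernetes/cfg/token.csv`. The same token must then be used when generating bootstrap.kubeconfig, otherwise the kubelet's bootstrap request is rejected.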

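Node component configuration

    # Copy the binaries from the master
    scp kubelet kube-proxy root@192.168.31.42:/opt/kubernetes/bin/
    scp kubelet kube-proxy root@192.168.31.43:/opt/kubernetes/bin/
    # Upload node.zip to the node server, extract it, and start kubelet
    ./kubelet.sh 192.168.31.42
    # On the master, approve the node's certificate request (see the commands in the next section)
    # Start kube-proxy
    ./proxy.sh 192.168.31.42
    # Repeat the same steps on the other node

Common commands on the master

    # List the certificate signing requests that have been submitted
    ./kubectl get csr
    # Approve the request with the given name
    ./kubectl certificate approve [NAME from the previous command]
    # Show node information
    ./kubectl get node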
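When several nodes join at once, approving each request by hand gets tedious. The filter below picks the pending request names out of `kubectl get csr` output. It assumes the tabular layout where the first column is NAME and the last column is CONDITION; the function name `pending_csr_names` is hypothetical.

```shell
# Read `kubectl get csr` output on stdin and print the names of pending requests.
# Assumes: header on the first line, NAME in column 1, CONDITION in the last column.
pending_csr_names() {
    awk 'NR > 1 && $NF == "Pending" { print $1 }'
}

# Example use on the master (approves every pending request, so review the list first):
#   ./kubectl get csr | pending_csr_names | while read -r name; do
#       ./kubectl certificate approve "$name"
#   done
```

Requests that are already `Approved,Issued` are skipped, so the loop is safe to run repeatedly.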

Deploying a cluster with kubeadm

Prerequisites

    # Disable swap. By default the kubelet refuses to start while swap is enabled, and swapping also hurts performance
    #   temporarily:
    swapoff -a
    #   permanently: comment out the swap entry in /etc/fstab
    vim /etc/fstab
    # Disable the firewall
    systemctl stop firewalld && systemctl disable firewalld
    # Disable SELinux
    sed -i 's/enforcing/disabled/' /etc/selinux/config && setenforce 0 && getenforce
    # Add host entries (optional)
    cat <<EOF >> /etc/hosts
    192.168.31.65 master
    192.168.31.66 node1
    192.168.31.67 node2
    EOF
    # Pass bridged IPv4 traffic to the iptables chains (improves compatibility with network plugins)
    cat > /etc/sysctl.d/k8s.conf <<EOF
    net.bridge.bridge-nf-call-ip6tables=1
    net.bridge.bridge-nf-call-iptables=1
    EOF
    sysctl --system
    # Install docker
    wget https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo -O /etc/yum.repos.d/docker-ce.repo
    yum -y install docker-ce-18.06.1.ce-3.el7
    systemctl start docker && systemctl enable docker
    docker --version
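The permanent swap change can be scripted instead of done in an editor. The sketch below prints a copy of an fstab file with every active swap entry commented out; it does not modify the file itself. The function name `comment_out_swap` is hypothetical.

```shell
# Print the given fstab with swap entries commented out.
# Lines that are already comments are left untouched.
# Argument: path to an fstab-style file.
comment_out_swap() {
    sed -e '/^[[:space:]]*#/!s/^\(.*[[:space:]]swap[[:space:]].*\)$/#\1/' "$1"
}

# Example use, keeping a backup of the original file:
#   cp /etc/fstab /etc/fstab.bak
#   comment_out_swap /etc/fstab.bak > /etc/fstab
```

Writing to stdout first makes it easy to review the result with `diff` before replacing /etc/fstab.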

Install kubeadm, kubelet, and kubectl

    # Configure the repository
    cat > /etc/yum.repos.d/kubernetes.repo <<EOF
    [kubernetes]
    name=Kubernetes
    baseurl=http://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64
    enabled=1
    gpgcheck=0
    repo_gpgcheck=0
    gpgkey=http://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg http://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg
    EOF
    # Install the components
    yum -y install kubelet-1.15.0 kubeadm-1.15.0 kubectl-1.15.0
    systemctl start kubelet && systemctl enable kubelet

Master node

    # Initialize the control plane, pulling images from a mirror repository
    kubeadm init \
      --apiserver-advertise-address=192.168.31.65 \
      --image-repository registry.aliyuncs.com/google_containers \
      --kubernetes-version v1.15.0 \
      --service-cidr=10.1.0.0/16 \
      --pod-network-cidr=10.244.0.0/16
    # PS: when initialization finishes, note the kubeadm join command printed at the end and run it on each node
    # Set up kubectl for the current user
    mkdir -p $HOME/.kube
    sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
    sudo chown $(id -u):$(id -g) $HOME/.kube/config
    # PS: test with
    kubectl get nodes
    # Apply the flannel resources from a YAML file
    kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/a70459be0084506e4ec919aa1c114638878db11b/Documentation/kube-flannel.yml
    # PS: the same YAML is reproduced in the block below for reference

    ---
    kind: ClusterRole
    apiVersion: rbac.authorization.k8s.io/v1beta1
    metadata:
      name: flannel
    rules:
      - apiGroups:
          - ""
        resources:
          - pods
        verbs:
          - get
      - apiGroups:
          - ""
        resources:
          - nodes
        verbs:
          - list
          - watch
      - apiGroups:
          - ""
        resources:
          - nodes/status
        verbs:
          - patch
    ---
    kind: ClusterRoleBinding
    apiVersion: rbac.authorization.k8s.io/v1beta1
    metadata:
      name: flannel
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: flannel
    subjects:
    - kind: ServiceAccount
      name: flannel
      namespace: kube-system
    ---
    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: flannel
      namespace: kube-system
    ---
    kind: ConfigMap
    apiVersion: v1
    metadata:
      name: kube-flannel-cfg
      namespace: kube-system
      labels:
        tier: node
        app: flannel
    data:
      cni-conf.json: |
        {
          "name": "cbr0",
          "plugins": [
            {
              "type": "flannel",
              "delegate": {
                "hairpinMode": true,
                "isDefaultGateway": true
              }
            },
            {
              "type": "portmap",
              "capabilities": {
                "portMappings": true
              }
            }
          ]
        }
      net-conf.json: |
        {
          "Network": "10.244.0.0/16",
          "Backend": {
            "Type": "vxlan"
          }
        }
    ---
    apiVersion: extensions/v1beta1
    kind: DaemonSet
    metadata:
      name: kube-flannel-ds-amd64
      namespace: kube-system
      labels:
        tier: node
        app: flannel
    spec:
      template:
        metadata:
          labels:
            tier: node
            app: flannel
        spec:
          hostNetwork: true
          nodeSelector:
            beta.kubernetes.io/arch: amd64
          tolerations:
          - operator: Exists
            effect: NoSchedule
          serviceAccountName: flannel
          initContainers:
          - name: install-cni
            image: quay.io/coreos/flannel:v0.11.0-amd64
            command:
            - cp
            args:
            - -f
            - /etc/kube-flannel/cni-conf.json
            - /etc/cni/net.d/10-flannel.conflist
            volumeMounts:
            - name: cni
              mountPath: /etc/cni/net.d
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
          containers:
          - name: kube-flannel
            image: quay.io/coreos/flannel:v0.11.0-amd64
            command:
            - /opt/bin/flanneld
            args:
            - --ip-masq
            - --kube-subnet-mgr
            resources:
              requests:
                cpu: "100m"
                memory: "50Mi"
              limits:
                cpu: "100m"
                memory: "50Mi"
            securityContext:
              privileged: true
            env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            volumeMounts:
            - name: run
              mountPath: /run
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
          volumes:
            - name: run
              hostPath:
                path: /run
            - name: cni
              hostPath:
                path: /etc/cni/net.d
            - name: flannel-cfg
              configMap:
                name: kube-flannel-cfg
    ---
    apiVersion: extensions/v1beta1
    kind: DaemonSet
    metadata:
      name: kube-flannel-ds-arm64
      namespace: kube-system
      labels:
        tier: node
        app: flannel
    spec:
      template:
        metadata:
          labels:
            tier: node
            app: flannel
        spec:
          hostNetwork: true
          nodeSelector:
            beta.kubernetes.io/arch: arm64
          tolerations:
          - operator: Exists
            effect: NoSchedule
          serviceAccountName: flannel
          initContainers:
          - name: install-cni
            image: quay.io/coreos/flannel:v0.11.0-arm64
            command:
            - cp
            args:
            - -f
            - /etc/kube-flannel/cni-conf.json
            - /etc/cni/net.d/10-flannel.conflist
            volumeMounts:
            - name: cni
              mountPath: /etc/cni/net.d
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
          containers:
          - name: kube-flannel
            image: quay.io/coreos/flannel:v0.11.0-arm64
            command:
            - /opt/bin/flanneld
            args:
            - --ip-masq
            - --kube-subnet-mgr
            resources:
              requests:
                cpu: "100m"
                memory: "50Mi"
              limits:
                cpu: "100m"
                memory: "50Mi"
            securityContext:
              privileged: true
            env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            volumeMounts:
            - name: run
              mountPath: /run
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
          volumes:
            - name: run
              hostPath:
                path: /run
            - name: cni
              hostPath:
                path: /etc/cni/net.d
            - name: flannel-cfg
              configMap:
                name: kube-flannel-cfg
    ---
    apiVersion: extensions/v1beta1
    kind: DaemonSet
    metadata:
      name: kube-flannel-ds-arm
      namespace: kube-system
      labels:
        tier: node
        app: flannel
    spec:
      template:
        metadata:
          labels:
            tier: node
            app: flannel
        spec:
          hostNetwork: true
          nodeSelector:
            beta.kubernetes.io/arch: arm
          tolerations:
          - operator: Exists
            effect: NoSchedule
          serviceAccountName: flannel
          initContainers:
          - name: install-cni
            image: quay.io/coreos/flannel:v0.11.0-arm
            command:
            - cp
            args:
            - -f
            - /etc/kube-flannel/cni-conf.json
            - /etc/cni/net.d/10-flannel.conflist
            volumeMounts:
            - name: cni
              mountPath: /etc/cni/net.d
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
          containers:
          - name: kube-flannel
            image: quay.io/coreos/flannel:v0.11.0-arm
            command:
            - /opt/bin/flanneld
            args:
            - --ip-masq
            - --kube-subnet-mgr
            resources:
              requests:
                cpu: "100m"
                memory: "50Mi"
              limits:
                cpu: "100m"
                memory: "50Mi"
            securityContext:
              privileged: true
            env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            volumeMounts:
            - name: run
              mountPath: /run
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
          volumes:
            - name: run
              hostPath:
                path: /run
            - name: cni
              hostPath:
                path: /etc/cni/net.d
            - name: flannel-cfg
              configMap:
                name: kube-flannel-cfg
    ---
    apiVersion: extensions/v1beta1
    kind: DaemonSet
    metadata:
      name: kube-flannel-ds-ppc64le
      namespace: kube-system
      labels:
        tier: node
        app: flannel
    spec:
      template:
        metadata:
          labels:
            tier: node
            app: flannel
        spec:
          hostNetwork: true
          nodeSelector:
            beta.kubernetes.io/arch: ppc64le
          tolerations:
          - operator: Exists
            effect: NoSchedule
          serviceAccountName: flannel
          initContainers:
          - name: install-cni
            image: quay.io/coreos/flannel:v0.11.0-ppc64le
            command:
            - cp
            args:
            - -f
            - /etc/kube-flannel/cni-conf.json
            - /etc/cni/net.d/10-flannel.conflist
            volumeMounts:
            - name: cni
              mountPath: /etc/cni/net.d
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
          containers:
          - name: kube-flannel
            image: quay.io/coreos/flannel:v0.11.0-ppc64le
            command:
            - /opt/bin/flanneld
            args:
            - --ip-masq
            - --kube-subnet-mgr
            resources:
              requests:
                cpu: "100m"
                memory: "50Mi"
              limits:
                cpu: "100m"
                memory: "50Mi"
            securityContext:
              privileged: true
            env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            volumeMounts:
            - name: run
              mountPath: /run
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
          volumes:
            - name: run
              hostPath:
                path: /run
            - name: cni
              hostPath:
                path: /etc/cni/net.d
            - name: flannel-cfg
              configMap:
                name: kube-flannel-cfg
    ---
    apiVersion: extensions/v1beta1
    kind: DaemonSet
    metadata:
      name: kube-flannel-ds-s390x
      namespace: kube-system
      labels:
        tier: node
        app: flannel
    spec:
      template:
        metadata:
          labels:
            tier: node
            app: flannel
        spec:
          hostNetwork: true
          nodeSelector:
            beta.kubernetes.io/arch: s390x
          tolerations:
          - operator: Exists
            effect: NoSchedule
          serviceAccountName: flannel
          initContainers:
          - name: install-cni
            image: quay.io/coreos/flannel:v0.11.0-s390x
            command:
            - cp
            args:
            - -f
            - /etc/kube-flannel/cni-conf.json
            - /etc/cni/net.d/10-flannel.conflist
            volumeMounts:
            - name: cni
              mountPath: /etc/cni/net.d
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
          containers:
          - name: kube-flannel
            image: quay.io/coreos/flannel:v0.11.0-s390x
            command:
            - /opt/bin/flanneld
            args:
            - --ip-masq
            - --kube-subnet-mgr
            resources:
              requests:
                cpu: "100m"
                memory: "50Mi"
              limits:
                cpu: "100m"
                memory: "50Mi"
            securityContext:
              privileged: true
            env:
            - name: POD_NAME
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: POD_NAMESPACE
              valueFrom:
                fieldRef:
                  fieldPath: metadata.namespace
            volumeMounts:
            - name: run
              mountPath: /run
            - name: flannel-cfg
              mountPath: /etc/kube-flannel/
          volumes:
            - name: run
              hostPath:
                path: /run
            - name: cni
              hostPath:
                path: /etc/cni/net.d
            - name: flannel-cfg
              configMap:
                name: kube-flannel-cfg
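The `kubeadm init` flags used for the master node can also be kept in a configuration file, which is easier to review and put under version control. The sketch below prints such a file using the `kubeadm.k8s.io/v1beta2` API that ships with kubeadm 1.15; the function name `render_kubeadm_config` is hypothetical, and the mapping of flags to fields should be checked against the kubeadm version actually installed.

```shell
# Print a kubeadm configuration equivalent to the init flags used in these notes.
# Arguments: <advertise address> <kubernetes version> <service CIDR> <pod CIDR>
render_kubeadm_config() {
    cat <<EOF
apiVersion: kubeadm.k8s.io/v1beta2
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: $1
---
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: $2
imageRepository: registry.aliyuncs.com/google_containers
networking:
  serviceSubnet: $3
  podSubnet: $4
EOF
}

# Example use:
#   render_kubeadm_config 192.168.31.65 v1.15.0 10.1.0.0/16 10.244.0.0/16 > kubeadm.yaml
#   kubeadm init --config kubeadm.yaml
```

Note that `podSubnet` has to match the `Network` value in the flannel ConfigMap (10.244.0.0/16 here), otherwise Pods receive addresses that flannel does not route.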

Join the nodes to the cluster

    kubeadm join 192.168.31.65:6443 --token nf1tni.1hast5a6ryozmo3i \
      --discovery-token-ca-cert-hash sha256:173d6ea628e97f25f6d8bc4dd2f3cecc30e9336fae04621dd15ca8160069e3d9
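The token and hash in the join command are specific to one cluster, and bootstrap tokens expire (24 hours by default), so a copied command may stop working. A quick format check catches truncated copies before they reach `kubeadm join`; the function name `valid_bootstrap_token` is hypothetical, while the format (6 characters, a dot, 16 characters, all lowercase letters or digits) is the documented bootstrap token format.

```shell
# Succeed only if the argument looks like a kubeadm bootstrap token.
valid_bootstrap_token() {
    printf '%s\n' "$1" | grep -Eq '^[a-z0-9]{6}\.[a-z0-9]{16}$'
}

# If the original token has expired, a fresh join command can be printed on the master:
#   kubeadm token create --print-join-command
```

Usage: `valid_bootstrap_token nf1tni.1hast5a6ryozmo3i && echo ok`.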

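Test the Kubernetes cluster

    # Create an nginx deployment in the cluster and expose it
    kubectl create deployment nginx --image=nginx
    kubectl expose deployment nginx --port=80 --type=NodePort
    # Show the Pod and Service details, then open the NodePort listed for nginx in a browser to test
    kubectl get pod,svc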
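The port to open in the browser is the second number in the PORT(S) column of `kubectl get svc`, for example `80:31234/TCP`. The helper below extracts it; the function name `node_port_of` and the sample port number are illustrative.

```shell
# Extract the node port from a PORT(S) value such as "80:31234/TCP".
# Prints nothing if the value has no node port (e.g. a ClusterIP service showing "80/TCP").
node_port_of() {
    printf '%s\n' "$1" | sed -n 's/^[0-9]*:\([0-9]*\)\/.*$/\1/p'
}

# The same value can be read directly from the API object:
#   kubectl get svc nginx -o jsonpath='{.spec.ports[0].nodePort}'
```

With the port in hand, `curl http://<node-ip>:<node-port>` against any node should return the nginx welcome page.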

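Deploy the Dashboard from the master

    # Upload the YAML configuration file shown below to the server and apply it
    kubectl apply -f ./kubernetes-dashboard.yaml
    # Check the status
    kubectl get pods -n kube-system
    kubectl get pods,svc -n kube-system
    # Test in a browser (the 360 browser is required here)
    #   https://192.168.31.66:30001/
    # Create a service account for logging in to the Dashboard
    kubectl create serviceaccount dashboard-admin -n kube-system
    kubectl create clusterrolebinding dashboard-admin --clusterrole=cluster-admin --serviceaccount=kube-system:dashboard-admin
    # Get the token of the dashboard-admin service account, used to log in to the Dashboard
    kubectl describe secrets -n kube-system $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}')
    # The YAML can also be downloaded from the official repository:
    #   https://raw.githubusercontent.com/kubernetes/dashboard/v1.10.1/src/deploy/recommended/kubernetes-dashboard.yaml
    # PS: the settings changed relative to the official file are:
    #   type: NodePort      exposes the Service on a port of every node
    #   nodePort: 30001     the external port number; the default NodePort range starts at 30000
    #   image: lizhenliang/kubernetes-dashboard-amd64:v1.10.1     a mirror image, used in case the default overseas registry is unreachable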
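The `kubectl describe secrets` command prints several fields, of which only the `token:` line is needed for the login form. The filter below isolates it, assuming the usual `key:   value` layout of the describe output; the function name `extract_token` is hypothetical.

```shell
# Read `kubectl describe secret` output on stdin and print only the token value.
extract_token() {
    awk '$1 == "token:" { print $2 }'
}

# Example use on the master:
#   kubectl describe secrets -n kube-system \
#       $(kubectl -n kube-system get secret | awk '/dashboard-admin/{print $1}') | extract_token
```

Because dashboard-admin is bound to cluster-admin, this token grants full control of the cluster and should be handled like a root password.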

YAML configuration file

    # Copyright 2017 The Kubernetes Authors.
    #
    # Licensed under the Apache License, Version 2.0 (the "License");
    # you may not use this file except in compliance with the License.
    # You may obtain a copy of the License at
    #
    # http://www.apache.org/licenses/LICENSE-2.0
    #
    # Unless required by applicable law or agreed to in writing, software
    # distributed under the License is distributed on an "AS IS" BASIS,
    # WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.
    # See the License for the specific language governing permissions and
    # limitations under the License.

    # ------------------- Dashboard Secret ------------------- #

    apiVersion: v1
    kind: Secret
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard-certs
      namespace: kube-system
    type: Opaque

    ---
    # ------------------- Dashboard Service Account ------------------- #

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard
      namespace: kube-system

    ---
    # ------------------- Dashboard Role & Role Binding ------------------- #

    kind: Role
    apiVersion: rbac.authorization.k8s.io/v1
    metadata:
      name: kubernetes-dashboard-minimal
      namespace: kube-system
    rules:
      # Allow Dashboard to create 'kubernetes-dashboard-key-holder' secret.
    - apiGroups: [""]
      resources: ["secrets"]
      verbs: ["create"]
      # Allow Dashboard to create 'kubernetes-dashboard-settings' config map.
    - apiGroups: [""]
      resources: ["configmaps"]
      verbs: ["create"]
      # Allow Dashboard to get, update and delete Dashboard exclusive secrets.
    - apiGroups: [""]
      resources: ["secrets"]
      resourceNames: ["kubernetes-dashboard-key-holder", "kubernetes-dashboard-certs"]
      verbs: ["get", "update", "delete"]
      # Allow Dashboard to get and update 'kubernetes-dashboard-settings' config map.
    - apiGroups: [""]
      resources: ["configmaps"]
      resourceNames: ["kubernetes-dashboard-settings"]
      verbs: ["get", "update"]
      # Allow Dashboard to get metrics from heapster.
    - apiGroups: [""]
      resources: ["services"]
      resourceNames: ["heapster"]
      verbs: ["proxy"]
    - apiGroups: [""]
      resources: ["services/proxy"]
      resourceNames: ["heapster", "http:heapster:", "https:heapster:"]
      verbs: ["get"]

    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: RoleBinding
    metadata:
      name: kubernetes-dashboard-minimal
      namespace: kube-system
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: Role
      name: kubernetes-dashboard-minimal
    subjects:
    - kind: ServiceAccount
      name: kubernetes-dashboard
      namespace: kube-system

    ---
    # ------------------- Dashboard Deployment ------------------- #

    kind: Deployment
    apiVersion: apps/v1
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard
      namespace: kube-system
    spec:
      replicas: 1
      revisionHistoryLimit: 10
      selector:
        matchLabels:
          k8s-app: kubernetes-dashboard
      template:
        metadata:
          labels:
            k8s-app: kubernetes-dashboard
        spec:
          containers:
          - name: kubernetes-dashboard
            image: lizhenliang/kubernetes-dashboard-amd64:v1.10.1
            ports:
            - containerPort: 8443
              protocol: TCP
            args:
              - --auto-generate-certificates
              # Uncomment the following line to manually specify Kubernetes API server Host
              # If not specified, Dashboard will attempt to auto discover the API server and connect
              # to it. Uncomment only if the default does not work.
              # - --apiserver-host=http://my-address:port
            volumeMounts:
            - name: kubernetes-dashboard-certs
              mountPath: /certs
              # Create on-disk volume to store exec logs
            - mountPath: /tmp
              name: tmp-volume
            livenessProbe:
              httpGet:
                scheme: HTTPS
                path: /
                port: 8443
              initialDelaySeconds: 30
              timeoutSeconds: 30
          volumes:
          - name: kubernetes-dashboard-certs
            secret:
              secretName: kubernetes-dashboard-certs
          - name: tmp-volume
            emptyDir: {}
          serviceAccountName: kubernetes-dashboard
          # Comment the following tolerations if Dashboard must not be deployed on master
          tolerations:
          - key: node-role.kubernetes.io/master
            effect: NoSchedule

    ---
    # ------------------- Dashboard Service ------------------- #

    kind: Service
    apiVersion: v1
    metadata:
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard
      namespace: kube-system
    spec:
      type: NodePort
      ports:
        - port: 443
          targetPort: 8443
          nodePort: 30001
      selector:
        k8s-app: kubernetes-dashboard