1.准备工作

因为Hive基于Hadoop,所以我们需要Hadoop的环境,具体Hadoop的环境如何搭建可以查看前几篇文章。要使用JDBC访问Hive,可能会出现权限问题,我们需要在Hadoop的配置文件core-site.xml中加入如下配置,赋予root访问权限

<property><name>hadoop.proxyuser.root.hosts</name><value>*</value></property><property><name>hadoop.proxyuser.root.groups</name><value>*</value></property>

此外,要使用JDBC则必须开启HiveServer2,可以通过下面的方式启动HiveServer2

hiverserver2 &hive --service hiveserver2

但我遇到了输出语句打印到控制台的问题,所以我这里用下面这个命令

nohup hiveserver2 >> /usr/local/hive/log/hiveserver2.log 2>&1 &

默认开启10000号端口

2.导入依赖、编写代码

因为Hive基于Hadoop的原因,我们这里用之前Hadoop与SpringBoot整合的项目来继续整合Hive

pom.xml

<?xml version="1.0" encoding="UTF-8"?><project xmlns="http://maven.apache.org/POM/4.0.0" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 https://maven.apache.org/xsd/maven-4.0.0.xsd"><modelVersion>4.0.0</modelVersion><parent><groupId>org.springframework.boot</groupId><artifactId>spring-boot-starter-parent</artifactId><version>2.7.0</version><relativePath/> <!-- lookup parent from repository --></parent><groupId>com.zym</groupId><artifactId>hadoop_springboot</artifactId><version>0.0.1-SNAPSHOT</version><name>hadoop_springboot</name><description>Demo project for Spring Boot</description><properties><java.version>1.8</java.version><hadoop.version>3.3.3</hadoop.version></properties><dependencies><dependency><groupId>org.springframework.boot</groupId><artifactId>spring-boot-starter-web</artifactId></dependency><!-- hadoop --><dependency><groupId>org.apache.hadoop</groupId><artifactId>hadoop-common</artifactId><version>${hadoop.version}</version></dependency><dependency><groupId>org.apache.hadoop</groupId><artifactId>hadoop-streaming</artifactId><version>${hadoop.version}</version></dependency><dependency><groupId>org.apache.hadoop</groupId><artifactId>hadoop-yarn-common</artifactId><version>${hadoop.version}</version><exclusions><exclusion><groupId>com.google.guava</groupId><artifactId>guava</artifactId></exclusion></exclusions></dependency><dependency><groupId>org.apache.hadoop</groupId><artifactId>hadoop-distcp</artifactId><version>${hadoop.version}</version><scope>provided</scope></dependency><dependency><groupId>org.apache.hadoop</groupId><artifactId>hadoop-mapreduce-client-core</artifactId><version>${hadoop.version}</version></dependency><dependency><groupId>org.apache.hadoop</groupId><artifactId>hadoop-hdfs</artifactId><version>${hadoop.version}</version></dependency><dependency><groupId>org.apache.hadoop</groupId><artifactId>hadoop-mapreduce-client-jobclient</artifactId><version>${hadoop.version}</version><scope>provided</scope></dependency><dependency><groupId>com.alibaba</groupId><artifactId>druid</artifactId><version>1.2.1</version></dependency><!-- hive依赖 --><dependency><groupId>org.apache.hive</groupId><artifactId>hive-jdbc</artifactId><version>2.3.0</version></dependency><!-- 中文分词器 --><dependency><groupId>cn.bestwu</groupId><artifactId>ik-analyzers</artifactId><version>5.1.0</version></dependency><dependency><groupId>org.projectlombok</groupId><artifactId>lombok</artifactId></dependency><dependency><groupId>com.alibaba</groupId><artifactId>fastjson</artifactId><version>1.2.46</version></dependency><!-- 此处的导入依赖与mapreduce有关 --><dependency><groupId>jdk.tools</groupId><artifactId>jdk.tools</artifactId><version>1.8</version><scope>system</scope><systemPath>${JAVA_HOME}/lib/tools.jar</systemPath></dependency><dependency><groupId>junit</groupId><artifactId>junit</artifactId><scope>test</scope></dependency><dependency><groupId>org.springframework.boot</groupId><artifactId>spring-boot-starter-test</artifactId><scope>test</scope></dependency><dependency><groupId>org.springframework.boot</groupId><artifactId>spring-boot-starter-jdbc</artifactId></dependency></dependencies><build><plugins><plugin><groupId>org.springframework.boot</groupId><artifactId>spring-boot-maven-plugin</artifactId></plugin></plugins></build></project>

application.yml

# tomcat thread = 200server:tomcat:max-threads: 1000# edit tomcat portport: 8900# session time 30session-timeout: 60spring:application:name: hadoopservlet:multipart:max-file-size: 50MBmax-request-size: 50MBdatasource:hive: #hive数据源url: jdbc:hive2://hadoop0:10000/testtype: com.alibaba.druid.pool.DruidDataSourceusername: hive1password: Newpassword2022!!!driver-class-name: org.apache.hive.jdbc.HiveDrivercommon-config: #连接池统一配置,应用到所有的数据源initialSize: 1minIdle: 1maxIdle: 5maxActive: 50maxWait: 10000timeBetweenEvictionRunsMillis: 10000minEvictableIdleTimeMillis: 300000validationQuery: select 'x'testWhileIdle: truetestOnBorrow: falsetestOnReturn: falsepoolPreparedStatements: truemaxOpenPreparedStatements: 20filters: stathdfs:path: hdfs://hadoop0:9000username: root

DataSourceCommonProperties.java

import lombok.AllArgsConstructor;import lombok.Data;import lombok.NoArgsConstructor;import org.springframework.boot.context.properties.ConfigurationProperties;import org.springframework.stereotype.Component;@Data@NoArgsConstructor@AllArgsConstructor@Component@ConfigurationProperties(prefix = "spring.datasource.common-config", ignoreUnknownFields = false)public class DataSourceCommonProperties {private int initialSize = 10;private int minIdle;private int maxIdle;private int maxActive;private int maxWait;private int timeBetweenEvictionRunsMillis;private int minEvictableIdleTimeMillis;private String validationQuery;private boolean testWhileIdle;private boolean testOnBorrow;private boolean testOnReturn;private boolean poolPreparedStatements;private int maxOpenPreparedStatements;private String filters;private String mapperLocations;private String typeAliasPackage;}

HiveDruidConfiguration.java

import com.alibaba.druid.pool.DruidDataSource;import lombok.extern.slf4j.Slf4j;import org.springframework.beans.factory.annotation.Autowired;import org.springframework.beans.factory.annotation.Qualifier;import org.springframework.boot.context.properties.EnableConfigurationProperties;import org.springframework.context.annotation.Bean;import org.springframework.context.annotation.Configuration;import javax.sql.DataSource;import java.sql.SQLException;@Slf4j@Configuration@EnableConfigurationProperties({HiveJdbcProperties.class, DataSourceCommonProperties.class})public class HiveDruidConfiguration {@Autowiredprivate HiveJdbcProperties hiveJdbcProperties;@Autowiredprivate DataSourceCommonProperties dataSourceCommonProperties;@Bean("hiveDruidDataSource") //新建bean实例@Qualifier("hiveDruidDataSource")//标识public DataSource dataSource(){DruidDataSource datasource = new DruidDataSource();//配置数据源属性datasource.setUrl(hiveJdbcProperties.getUrl());datasource.setUsername(hiveJdbcProperties.getUsername());datasource.setPassword(hiveJdbcProperties.getPassword());datasource.setDriverClassName(hiveJdbcProperties.getDriverClassName());//配置统一属性datasource.setInitialSize(dataSourceCommonProperties.getInitialSize());datasource.setMinIdle(dataSourceCommonProperties.getMinIdle());datasource.setMaxActive(dataSourceCommonProperties.getMaxActive());datasource.setMaxWait(dataSourceCommonProperties.getMaxWait());datasource.setTimeBetweenEvictionRunsMillis(dataSourceCommonProperties.getTimeBetweenEvictionRunsMillis());datasource.setMinEvictableIdleTimeMillis(dataSourceCommonProperties.getMinEvictableIdleTimeMillis());datasource.setValidationQuery(dataSourceCommonProperties.getValidationQuery());datasource.setTestWhileIdle(dataSourceCommonProperties.isTestWhileIdle());datasource.setTestOnBorrow(dataSourceCommonProperties.isTestOnBorrow());datasource.setTestOnReturn(dataSourceCommonProperties.isTestOnReturn());datasource.setPoolPreparedStatements(dataSourceCommonProperties.isPoolPreparedStatements());try {datasource.setFilters(dataSourceCommonProperties.getFilters());} catch (SQLException e) {log.error("Druid configuration initialization filter error.", e);}return datasource;}}

HiveJdbcConfiguration.java

import org.springframework.beans.factory.annotation.Qualifier;import org.springframework.context.annotation.Bean;import org.springframework.context.annotation.Configuration;import org.springframework.jdbc.core.JdbcTemplate;import javax.sql.DataSource;@Configurationpublic class HiveJdbcConfiguration {@Bean("hiveJdbcTemplate")@Qualifier("hiveJdbcTemplate")public JdbcTemplate jdbcTemplate(@Qualifier("hiveDruidDataSource") DataSource dataSource) {return new JdbcTemplate(dataSource);}}

HiveJdbcProperties.java

import lombok.AllArgsConstructor;import lombok.Data;import lombok.NoArgsConstructor;import org.springframework.boot.context.properties.ConfigurationProperties;import org.springframework.stereotype.Component;@Data@NoArgsConstructor@AllArgsConstructor@Component@ConfigurationProperties(prefix = "spring.datasource.hive", ignoreUnknownFields = false)public class HiveJdbcProperties {private String url;private String type;private String username;private String password;private String driverClassName;}

HiveController.java

import com.zym.result.Result;import com.zym.service.HiveService;import lombok.extern.slf4j.Slf4j;import org.springframework.beans.factory.annotation.Autowired;import org.springframework.web.bind.annotation.GetMapping;import org.springframework.web.bind.annotation.RequestMapping;import org.springframework.web.bind.annotation.RestController;@RestController@RequestMapping("/hadoop/hive")@Slf4jpublic class HiveController {@Autowiredprivate HiveService hiveService;@GetMapping("/listAllTables")public Result listAllTables(){return new Result("SUCCESS","获取所有表成功",hiveService.listAllTables());}@GetMapping("/selectFromTable")public Result selectFromTable(String tableName){return new Result("SUCCESS","获取表内容成功",hiveService.selectFromTable(tableName));}@GetMapping("/select")public Result select(String hql){return new Result("SUCCESS","获取内容",hiveService.select(hql));}}

HiveService.java

import java.util.List;public interface HiveService {Object select(String hql);List<String> listAllTables();List<String> describeTable(String tableName);List<String> selectFromTable(String tableName);}

HiveServiceImpl.java

import com.zym.service.HiveService;import lombok.extern.slf4j.Slf4j;import org.springframework.beans.factory.annotation.Autowired;import org.springframework.beans.factory.annotation.Qualifier;import org.springframework.jdbc.core.JdbcTemplate;import org.springframework.stereotype.Service;import org.springframework.util.StringUtils;import javax.sql.DataSource;import java.sql.ResultSet;import java.sql.SQLException;import java.sql.Statement;import java.util.ArrayList;import java.util.Collections;import java.util.List;@Slf4j@Servicepublic class HiveServiceImpl implements HiveService {@Autowired@Qualifier("hiveJdbcTemplate")private JdbcTemplate hiveJdbcTemplate;@Autowired@Qualifier("hiveDruidDataSource")private DataSource hiveDruidDataSource;@Overridepublic Object select(String hql) {return hiveJdbcTemplate.queryForList(hql);}@Overridepublic List<String> listAllTables() {List<String> result = new ArrayList<>();try {Statement statement = hiveDruidDataSource.getConnection().createStatement();String sql = "show tables";log.info("Running: " + sql);ResultSet resultSet = statement.executeQuery(sql);while (resultSet.next()) {result.add(resultSet.getString(1));}return result;} catch (SQLException throwables) {log.error(throwables.getMessage());}return Collections.emptyList();}@Overridepublic List<String> describeTable(String tableName) {if (StringUtils.isEmpty(tableName)){return Collections.emptyList();}List<String> result = new ArrayList<>();try {Statement statement = hiveDruidDataSource.getConnection().createStatement();String sql = "describe " + tableName;log.info("Running" + sql);ResultSet resultSet = statement.executeQuery(sql);while (resultSet.next()) {result.add(resultSet.getString(1));}return result;} catch (SQLException throwables) {log.error(throwables.getMessage());}return Collections.emptyList();}@Overridepublic List<String> selectFromTable(String tableName) {if (StringUtils.isEmpty(tableName)){return Collections.emptyList();}List<String> result = new ArrayList<>();try {Statement statement = hiveDruidDataSource.getConnection().createStatement();String sql = "select * from " + tableName;log.info("Running" + sql);ResultSet resultSet = statement.executeQuery(sql);int columnCount = resultSet.getMetaData().getColumnCount();String str = null;while (resultSet.next()) {str = "";for (int i = 1; i < columnCount; i++) {str += resultSet.getString(i) + " ";}str += resultSet.getString(columnCount);log.info(str);result.add(str);}return result;} catch (SQLException throwables) {log.error(throwables.getMessage());}return Collections.emptyList();}}

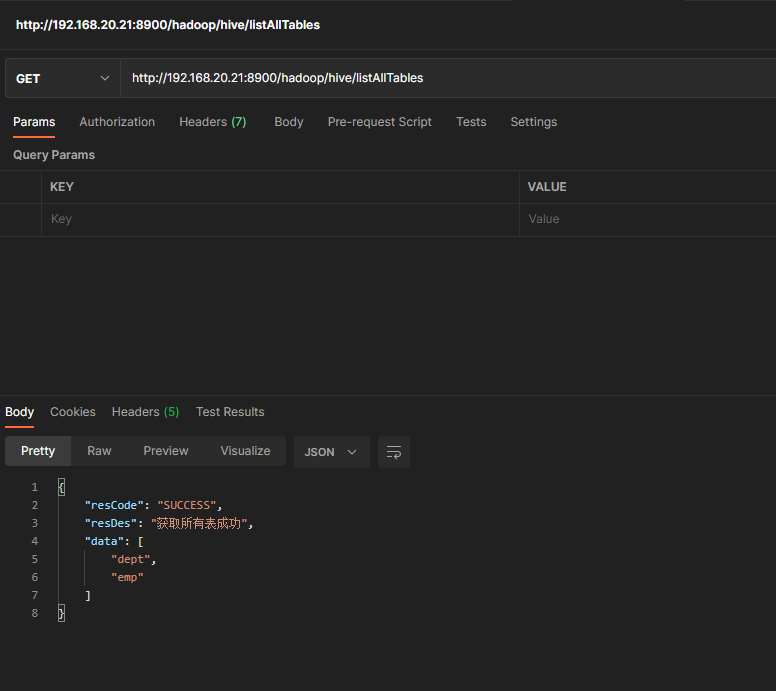

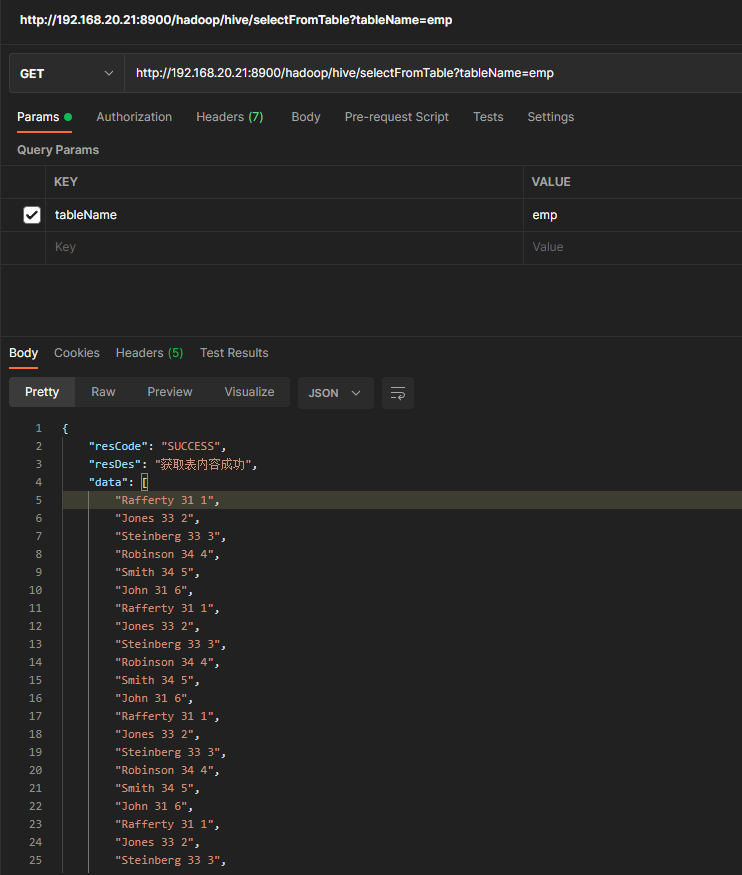

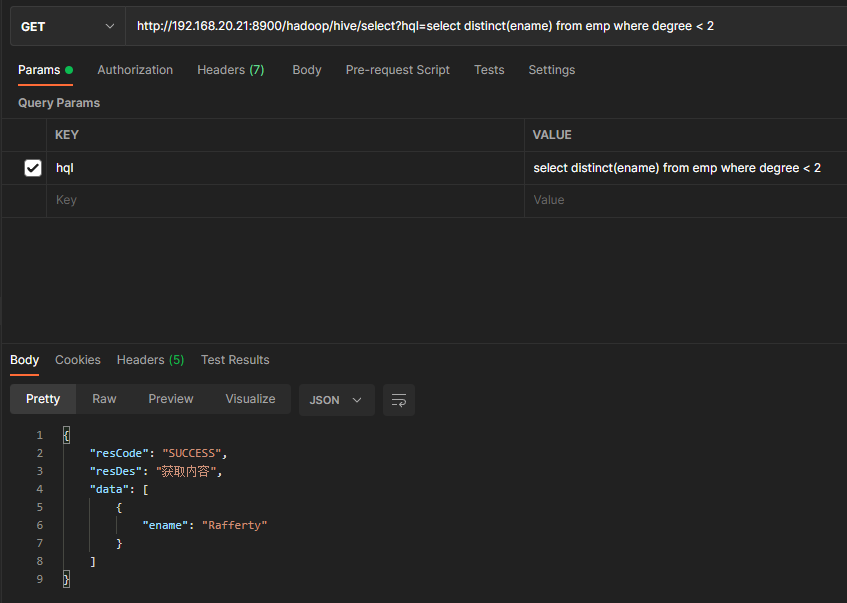

3.简单测试

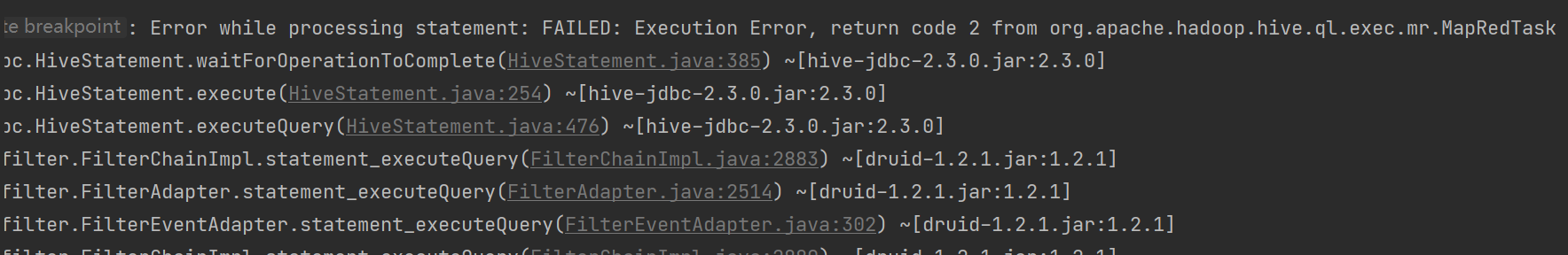

4.遇到的问题

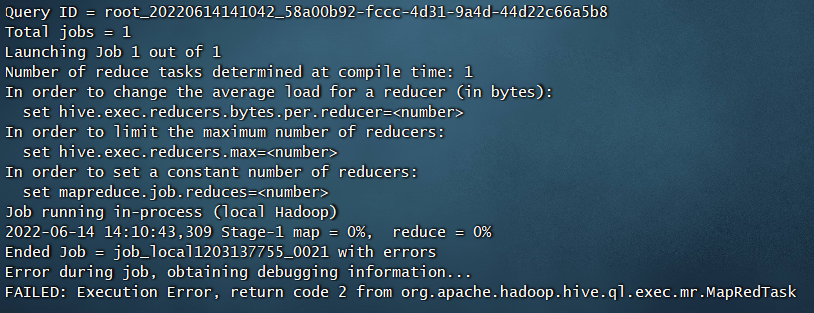

从控制台日志看不出来,我们到我们自定义的输出路径查看一下

与控制台报错大差不差,还是看不懂,只能看出来在job阶段出错了,我们再查看一下hive的日志

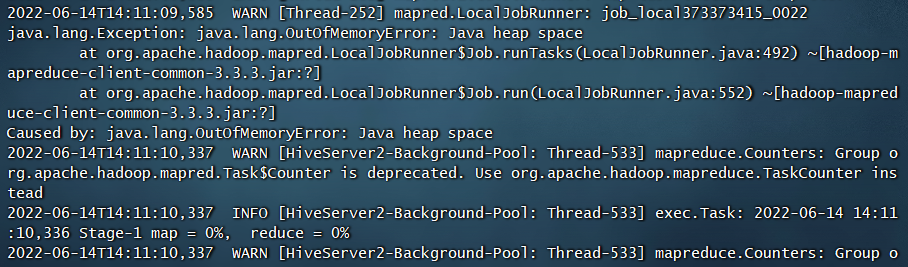

cat /tmp/root/hive.log

……内存溢出,行吧

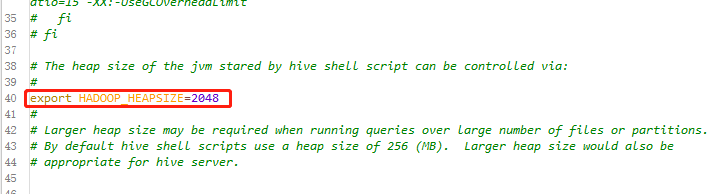

解决方法:在Hive的配置文件hive-env.sh里面配置heap大小

然后重新启动Hiveserver2即可

这里有一个遗留问题,在测试时发现,无论利用beeline还是原生jdbc,不需要输入用户名与密码也可链接上,目前还没找到什么原因,有懂的人可以分享一下为什么。

——通过在网上找了一些资料明白了一些,Hive安装的时候默认是没有用户名和密码的,如果想启用需要自己写代码自定义验证,然后给配置文件中加入用户名密码的信息

参考链接:

https://blog.csdn.net/Winter_chen001/article/details/110449266

https://blog.csdn.net/yamaxifeng_132/article/details/119869080