1 Introduction

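VGGNet is a neural network model published at the ICLR 2015 conference. It took first place in localization and second place in classification in the 2014 ImageNet (ILSVRC) competition.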

2 Basic Methods

Overview from Google's official documentation

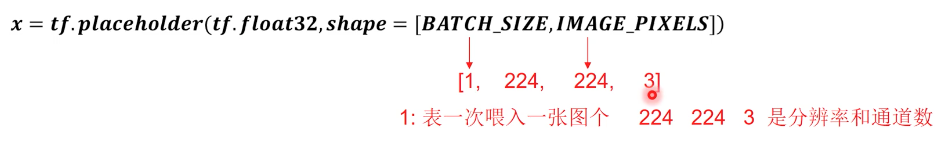

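(1) tf.placeholder reserves a slot for the real training/test samples, real features, or features to be processed. It only holds a place and needs no initial value; the data are fed into it (here `images`) as a dictionary through the feed_dict argument of sess.run: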

```python
images = tf.placeholder(tf.float32, shape=[BATCH_SIZE, IMAGE_PIXELS])
```

(2) np.load / np.save read an array from disk or write it to disk in binary format; the file extension is .npy:

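```python
np.save("name.npy", some_array)
some_variable = np.load("name.npy", encoding="ASCII").item()
# encoding may be omitted; its options are 'latin1', 'ASCII' and 'bytes', and the default is 'ASCII'
# Loading a stored Python object such as a dict also needs allow_pickle=True in NumPy >= 1.16.3
```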

(3) .item() extracts the Python object stored in the loaded array (here, a dict of key-value pairs):

```python
# Read vgg16.npy and extract the stored dict of model parameters into data_dict
data_dict = np.load("vgg16.npy", encoding="latin1", allow_pickle=True).item()
```
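To make (2) and (3) concrete, here is a small save/load round trip. It is a sketch: the dict `params`, its contents and the file name `toy_params.npy` are hypothetical stand-ins for the real vgg16.npy, which is assumed to map each layer name to a [weights, biases] list, the way `get_conv_filter` and `get_bias` in vgg16.py index it.

```python
import os
import tempfile

import numpy as np

# Hypothetical parameter dict with the layout assumed for vgg16.npy:
# layer name -> [weights, biases]
params = {
    "fc8": [np.zeros((4, 2), dtype=np.float32), np.ones(2, dtype=np.float32)],
}

path = os.path.join(tempfile.mkdtemp(), "toy_params.npy")
# np.save wraps the dict in a 0-d object array and pickles it
np.save(path, params)

# Loading a pickled object array requires allow_pickle=True (NumPy >= 1.16.3)
loaded = np.load(path, encoding="latin1", allow_pickle=True)
print(loaded.shape)  # ()
# .item() unwraps the single Python object (the dict) from the 0-d array
data_dict = loaded.item()
print(sorted(data_dict.keys()))  # ['fc8']
print(data_dict["fc8"][0].shape, data_dict["fc8"][1])
```

Without `.item()`, `loaded` is still a NumPy array, so `loaded["fc8"]` would fail; unwrapping first gives an ordinary dict.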

(4) tf.shape(a) returns the shape (dimensions) of a as a tensor; a can be a tensor, a list or an array.

(5) tf.nn.bias_add(value, bias) adds bias to value (the weighted sum), broadcasting bias along the last dimension.
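For the default NHWC layout, tf.nn.bias_add behaves like adding a 1-D bias along the last dimension. The NumPy function `bias_add` below is a hypothetical stand-in written only to illustrate that behaviour; it is not TensorFlow's implementation.

```python
import numpy as np


def bias_add(value, bias):
    """NumPy sketch of tf.nn.bias_add for the default NHWC layout:
    add a 1-D bias to the last dimension of value."""
    value = np.asarray(value)
    bias = np.asarray(bias)
    # The bias length must match the size of the last (channel) dimension
    if bias.ndim != 1 or bias.shape[0] != value.shape[-1]:
        raise ValueError("bias must be 1-D and match the last dimension of value")
    # Broadcasting adds bias[c] to every element of channel c
    return value + bias


x = np.arange(6, dtype=np.float32).reshape(2, 3)  # e.g. the result of tf.matmul(x, w)
b = np.array([10.0, 20.0, 30.0], dtype=np.float32)
print(bias_add(x, b))
# [[10. 21. 32.]
#  [13. 24. 35.]]
```

The same rule covers the conv layers in vgg16.py: a [1, 224, 224, 64] feature map takes a bias of length 64.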

(6) tf.reshape(tensor, [n, m]) reshapes to n rows and m columns; in tf.reshape(tensor, [-1, m]), the -1 means the number of rows is computed automatically from m.
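NumPy's reshape follows the same -1 rule, so the flattening done in `fc_layer` in vgg16.py can be sketched without TensorFlow. The shape [2, 7, 7, 512] assumes a batch of two and the standard VGG16 pool5 output; it is illustrative only.

```python
import numpy as np

# Assumed stand-in for two pool5 feature maps: [batch, height, width, channels]
features = np.zeros((2, 7, 7, 512), dtype=np.float32)

# Same computation as fc_layer: multiply all non-batch dimensions
dim = 1
for d in features.shape[1:]:
    dim *= d

# -1 lets reshape infer the number of rows (here the batch size)
flat = features.reshape(-1, dim)
print(dim, flat.shape)  # 25088 (2, 25088)
```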

(7) np.argsort(list) sorts the list in ascending order and returns the indices of the sorted elements rather than the values.
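app.py picks the five most likely classes with `np.argsort(probability[0])[-1:-6:-1]`. The sketch below uses a hypothetical 8-class probability vector (the real model outputs 1000 classes) to show why that slice returns the top five indices, largest first.

```python
import numpy as np

# Hypothetical probabilities for 8 classes (distinct values, so the order is unambiguous)
probability = np.array([0.05, 0.30, 0.02, 0.14, 0.20, 0.01, 0.12, 0.16])

order = np.argsort(probability)  # indices from smallest to largest probability
top5 = order[-1:-6:-1]           # walk backwards from the last element: largest first
print(top5)                      # [1 4 7 3 6]
print(probability[top5])

# Equivalent, arguably more readable form
assert order[::-1][:5].tolist() == top5.tolist()
```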

(8) os.getcwd() returns the current working directory.

(9) os.path.join(a, b, ...) joins path components into a full path, which can point to a specific file:

```python
vgg16_path = os.path.join(os.getcwd(), "vgg16.npy")
```

(10) tf.split(value, num_or_size_splits, axis): what to split, how to split it, and along which dimension:

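```python
# value is a tensor of shape [5, 30]
# Split value along axis 1 into three pieces of sizes 4, 15 and 11
split0, split1, split2 = tf.split(value, [4, 15, 11], 1)
tf.shape(split0)  # ==> [5, 4]
tf.shape(split1)  # ==> [5, 15]
tf.shape(split2)  # ==> [5, 11]
# Split value evenly into three pieces along axis 1
split0, split1, split2 = tf.split(value, num_or_size_splits=3, axis=1)
```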
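NumPy's np.split is a close relative, but its second argument means something different: a list gives cut positions, not piece sizes. This sketch uses a hypothetical [5, 30] array and converts the sizes [4, 15, 11] into cut points with np.cumsum so the pieces match the tf.split example.

```python
import numpy as np

value = np.arange(5 * 30).reshape(5, 30)  # stand-in for the [5, 30] tensor

# tf.split takes piece SIZES; np.split takes cut INDICES,
# so turn sizes [4, 15, 11] into cumulative cut points [4, 19]
sizes = [4, 15, 11]
cuts = np.cumsum(sizes)[:-1]
split0, split1, split2 = np.split(value, cuts, axis=1)
print(split0.shape, split1.shape, split2.shape)  # (5, 4) (5, 15) (5, 11)

# An integer splits evenly, like num_or_size_splits=3
even = np.split(value, 3, axis=1)
print([p.shape for p in even])  # [(5, 10), (5, 10), (5, 10)]
```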

(11) tf.concat(values, axis) concatenates tensors along the given dimension:

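```python
t1 = [[1, 2, 3], [4, 5, 6]]
t2 = [[7, 8, 9], [10, 11, 12]]
# Concatenate along dimension 0
tf.concat([t1, t2], 0)  # ==> [[1, 2, 3], [4, 5, 6], [7, 8, 9], [10, 11, 12]]
# Concatenate along dimension 1
tf.concat([t1, t2], 1)  # ==> [[1, 2, 3, 7, 8, 9], [4, 5, 6, 10, 11, 12]]
...
```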
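Vgg16.forward in vgg16.py combines (10) and (11): it splits the RGB channels, subtracts each channel's mean, and concatenates them back in BGR order. The NumPy sketch below mirrors those lines for illustration; the function name `rgb_to_bgr_centered` and the single test pixel are hypothetical.

```python
import numpy as np

VGG_MEAN = [103.939, 116.779, 123.68]  # B, G, R means, as in vgg16.py


def rgb_to_bgr_centered(images):
    """NumPy sketch of the split/concat step in Vgg16.forward.
    images: [batch, height, width, 3] RGB values in [0, 1]."""
    rgb_scaled = images * 255.0
    # Like tf.split(rgb_scaled, 3, 3): three pieces of size 1 along axis 3
    red, green, blue = np.split(rgb_scaled, 3, axis=3)
    # Like tf.concat([...], 3): join the channels back in B, G, R order
    return np.concatenate([blue - VGG_MEAN[0],
                           green - VGG_MEAN[1],
                           red - VGG_MEAN[2]], axis=3)


pixel = np.array([1.0, 0.5, 0.0]).reshape(1, 1, 1, 3)  # one orange pixel
out = rgb_to_bgr_centered(pixel)
print(out.shape)      # (1, 1, 1, 3)
print(out[0, 0, 0])   # approximately [-103.939, 10.721, 131.32]
```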

(12) fig = plt.figure('figure name') creates a figure window for visualizing images:

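```python
img = io.imread(image_path)
# nrows: number of rows, ncols: number of columns, index: which subplot is current
ax = fig.add_subplot(nrows, ncols, index)
# x positions (one per bar), bar heights, bar names, bar width, bar colour
ax.bar(x_positions, bar_values, tick_label=bar_names, width=bar_width, fc=bar_color)
ax.set_ylabel("")
ax.set_title("")
# x coordinate of the text, y coordinate of the text, the text itself
ax.text(text_x, text_y, text_string, ha='center', va='bottom', fontsize=7)
```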

(13) ax.imshow(img) draws the image in a subplot.

3 Implementation

app.py

The application script, which performs image recognition.

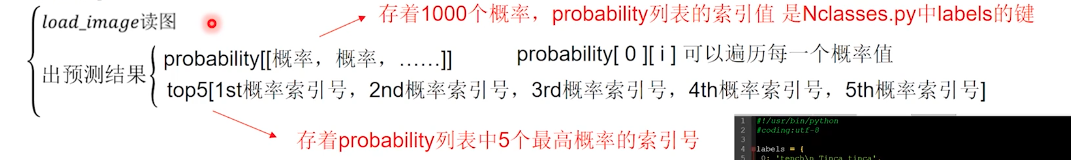

```python
#coding:utf-8
# Requires TensorFlow 1.x (tf.placeholder / tf.Session)
import numpy as np
import tensorflow as tf
# Plotting module
import matplotlib.pyplot as plt
# Custom modules
import vgg16
import utils
from Nclasses import labels

testNum = int(input("input the number of test pictures:"))
for _ in range(testNum):
    img_path = input('Input the path and image name:')
    # Preprocess the test image
    img_ready = utils.load_image(img_path)
    # Create a figure window and give it a title
    fig = plt.figure(u"Top-5 prediction results")
    with tf.Session() as sess:
        # Placeholder of shape [1, 224, 224, 3]
        images = tf.placeholder(tf.float32, [1, 224, 224, 3])
        # Instantiate the VGG model
        vgg = vgg16.Vgg16()
        # Build the forward pass by calling the member function with the input images
        vgg.forward(images)
        # Feed one batch into the network and get its predicted output
        probability = sess.run(vgg.prob, feed_dict={images: img_ready})
        # Indices of the five largest predicted probabilities, largest first
        top5 = np.argsort(probability[0])[-1:-6:-1]
        print("top5:", top5)
        # Two lists: the probability values and the corresponding labels
        values = []
        bar_label = []
        # Enumerate the five indices selected above
        for n, i in enumerate(top5):
            print("n:", n)
            print("i:", i)
            # Append the predicted probability for this index to values
            values.append(probability[0][i])
            # Append the label for this index to bar_label
            bar_label.append(labels[i])
            print(i, ":", labels[i], "----", utils.percent(probability[0][i]))
        # One row, one column: put the chart in the only subplot
        ax = fig.add_subplot(111)
        # Draw the bar chart
        ax.bar(range(len(values)), values, tick_label=bar_label, width=0.5, fc='g')
        # Set the y-axis label
        ax.set_ylabel(u'probability')
        # Add a title
        ax.set_title(u'Top-5')
        for a, b in zip(range(len(values)), values):
            # Show the predicted probability above each bar
            ax.text(a, b + 0.0005, utils.percent(b), ha='center', va='bottom', fontsize=7)
        # Display the figures
        plt.show()
```

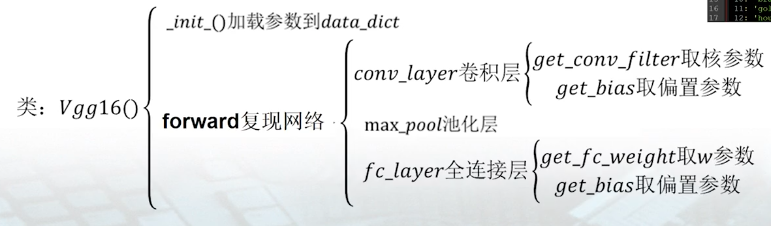

vgg16.py

Reads the model parameters and builds the model.

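```python
#!/usr/bin/python
#coding:utf-8
# TensorFlow study notes (Peking University): vgg16.py, fully annotated
# Requires TensorFlow 1.x (tf.variable_scope, graph-mode ops)
import os
import time
import numpy as np
import tensorflow as tf

# Per-channel mean pixel values of the training samples, in B, G, R order
VGG_MEAN = [103.939, 116.779, 123.68]


class Vgg16():
    def __init__(self, vgg16_path=None):
        if vgg16_path is None:
            # Default path: vgg16.npy in the current working directory
            vgg16_path = os.path.join(os.getcwd(), "vgg16.npy")
        # Load the dict of model parameters (layer name -> [weights, biases]);
        # NumPy >= 1.16.3 needs allow_pickle=True to load the pickled dict
        self.data_dict = np.load(vgg16_path, encoding='latin1', allow_pickle=True).item()

    def forward(self, images):
        print("build model started")
        # Record the time at which building the forward pass starts
        start_time = time.time()
        # Multiply every pixel by 255 (utils.load_image scales pixels to [0, 1])
        rgb_scaled = images * 255.0
        # Convert the colour channels from RGB to BGR
        red, green, blue = tf.split(rgb_scaled, 3, 3)
        # Subtract each channel's mean pixel value, which removes the average brightness
        # of the image; the technique is also common for grayscale images
        bgr = tf.concat([
            blue - VGG_MEAN[0],
            green - VGG_MEAN[1],
            red - VGG_MEAN[2]], 3)

        # Build the 16-layer VGG network (5 convolutional blocks and 3 fully connected layers),
        # reading each layer's parameters by its scope name
        # Block 1: two conv layers followed by a max pooling layer that shrinks the image
        # conv_layer uses the scope name to fetch the layer's kernel and bias, convolves,
        # and returns the output after the activation function
        self.conv1_1 = self.conv_layer(bgr, "conv1_1")
        self.conv1_2 = self.conv_layer(self.conv1_1, "conv1_2")
        # Pool according to the given pooling-layer name
        self.pool1 = self.max_pool_2x2(self.conv1_2, "pool1")

        # Block 2: two conv layers, one max pooling layer
        self.conv2_1 = self.conv_layer(self.pool1, "conv2_1")
        self.conv2_2 = self.conv_layer(self.conv2_1, "conv2_2")
        self.pool2 = self.max_pool_2x2(self.conv2_2, "pool2")

        # Block 3: three conv layers, one max pooling layer
        self.conv3_1 = self.conv_layer(self.pool2, "conv3_1")
        self.conv3_2 = self.conv_layer(self.conv3_1, "conv3_2")
        self.conv3_3 = self.conv_layer(self.conv3_2, "conv3_3")
        self.pool3 = self.max_pool_2x2(self.conv3_3, "pool3")

        # Block 4: three conv layers, one max pooling layer
        self.conv4_1 = self.conv_layer(self.pool3, "conv4_1")
        self.conv4_2 = self.conv_layer(self.conv4_1, "conv4_2")
        self.conv4_3 = self.conv_layer(self.conv4_2, "conv4_3")
        self.pool4 = self.max_pool_2x2(self.conv4_3, "pool4")

        # Block 5: three conv layers, one max pooling layer
        self.conv5_1 = self.conv_layer(self.pool4, "conv5_1")
        self.conv5_2 = self.conv_layer(self.conv5_1, "conv5_2")
        self.conv5_3 = self.conv_layer(self.conv5_2, "conv5_3")
        self.pool5 = self.max_pool_2x2(self.conv5_3, "pool5")

        # Layer 6, fully connected: weighted sum using the parameters under scope "fc6"
        self.fc6 = self.fc_layer(self.pool5, "fc6")
        # ReLU activation
        self.relu6 = tf.nn.relu(self.fc6)
        # Layer 7, fully connected
        self.fc7 = self.fc_layer(self.relu6, "fc7")
        self.relu7 = tf.nn.relu(self.fc7)
        # Layer 8, fully connected, followed by softmax to get class probabilities
        self.fc8 = self.fc_layer(self.relu7, "fc8")
        self.prob = tf.nn.softmax(self.fc8, name="prob")

        # Time taken to build the forward-pass graph
        end_time = time.time()
        print("time consuming: %f" % (end_time - start_time))
        # Clear the parameter dict loaded for this build
        self.data_dict = None

    # Convolution layer
    def conv_layer(self, x, name):
        # Find this conv layer's parameters by scope name
        with tf.variable_scope(name):
            # Read the layer's convolution kernel
            w = self.get_conv_filter(name)
            # Convolution: stride 1, SAME padding
            conv = tf.nn.conv2d(x, w, [1, 1, 1, 1], padding='SAME')
            # Read the bias
            conv_biases = self.get_bias(name)
            # Add the bias and apply the ReLU activation
            result = tf.nn.relu(tf.nn.bias_add(conv, conv_biases))
            return result

    # Fetch a convolution kernel
    def get_conv_filter(self, name):
        # Look up the kernel in the parameter dict by scope name
        return tf.constant(self.data_dict[name][0], name="filter")

    # Fetch a bias
    def get_bias(self, name):
        # Look up the bias in the parameter dict by scope name
        return tf.constant(self.data_dict[name][1], name="biases")

    # 2x2 max pooling with stride 2
    def max_pool_2x2(self, x, name):
        return tf.nn.max_pool(x, ksize=[1, 2, 2, 1], strides=[1, 2, 2, 1], padding='SAME', name=name)

    # Forward computation of a fully connected layer
    def fc_layer(self, x, name):
        # Compute the fully connected layer under scope name
        with tf.variable_scope(name):
            # List of the input's dimensions
            shape = x.get_shape().as_list()
            dim = 1
            for i in shape[1:]:
                # Multiply together all dimensions except the batch dimension
                dim *= i
            # Flatten the multi-dimensional features; this only changes the shape at fc6,
            # because the inputs of fc7 and fc8 are already 2-D
            x = tf.reshape(x, [-1, dim])
            # Read the weights
            w = self.get_fc_weight(name)
            # Read the bias
            b = self.get_bias(name)
            # Weighted sum of the inputs plus the bias
            result = tf.nn.bias_add(tf.matmul(x, w), b)
            return result

    # Fetch fully connected weights
    def get_fc_weight(self, name):
        # Look up the weights in the parameter dict by scope name
        return tf.constant(self.data_dict[name][0], name="weights")
```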
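Because every convolution in vgg16.py uses stride 1 with SAME padding and every pooling layer uses a 2x2 window with stride 2, the spatial size only changes at the pooling layers. The pure-Python sketch below traces the feature-map shapes for a 224x224 input. The per-block channel counts (64, 128, 256, 512, 512) are those of standard VGG16 and are an assumption here, since vgg16.py takes them implicitly from the kernels stored in vgg16.npy; the helper names are hypothetical.

```python
import math


def same_out(size, stride):
    # With padding='SAME', TensorFlow's output size is ceil(input / stride)
    return math.ceil(size / stride)


# (block name, number of conv layers, output channels); channel counts assumed
BLOCKS = [("conv1", 2, 64), ("conv2", 2, 128), ("conv3", 3, 256),
          ("conv4", 3, 512), ("conv5", 3, 512)]


def vgg16_shapes(size=224):
    shapes = []
    channels = 3
    for name, n_convs, out_ch in BLOCKS:
        # Stride-1 SAME convolutions keep the spatial size
        for k in range(1, n_convs + 1):
            size = same_out(size, 1)
            channels = out_ch
            shapes.append(("%s_%d" % (name, k), size, size, channels))
        # 2x2 max pooling with stride 2 halves the spatial size
        size = same_out(size, 2)
        shapes.append(("pool" + name[-1], size, size, channels))
    return shapes


shapes = vgg16_shapes()
for s in shapes:
    print(s)
h, w, c = shapes[-1][1:]
print("flattened input to fc6:", h * w * c)  # 25088
```

Together with fc6, fc7 and fc8, the 13 convolution layers counted here give the 16 weight layers in the network's name.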

utils.py

Reads in the image and formats the probabilities for display.

```python
#!/usr/bin/python
#coding:utf-8
from skimage import io, transform
import matplotlib as mpl
import matplotlib.pyplot as plt

mpl.rcParams['font.sans-serif'] = ['SimHei']  # display Chinese labels correctly
mpl.rcParams['axes.unicode_minus'] = False    # display minus signs correctly


def load_image(path):
    fig = plt.figure("Centre and Resize")
    # Read the image from the given path
    img = io.imread(path)
    # Normalize pixel values to [0, 1]
    img = img / 255.0
    # Split the figure into 1 row x 3 columns and put the next image in position 1
    ax0 = fig.add_subplot(131)
    # Add a label
    ax0.set_xlabel(u'Original Picture')
    # Show the image
    ax0.imshow(img)
    # Find the shortest edge of the image
    short_edge = min(img.shape[:2])
    # Subtract the shortest edge from the height and the width, then halve
    # (integer division so the offsets can be used as slice indices)
    y = (img.shape[0] - short_edge) // 2
    x = (img.shape[1] - short_edge) // 2
    # Crop the centred square
    crop_img = img[y:y + short_edge, x:x + short_edge]
    # Put the next image in position 2
    ax1 = fig.add_subplot(132)
    # Add a label
    ax1.set_xlabel(u"Centre Picture")
    # Show the image
    ax1.imshow(crop_img)
    # Resize to the fixed input size
    re_img = transform.resize(crop_img, (224, 224))
    # Put the next image in position 3
    ax2 = fig.add_subplot(133)
    ax2.set_xlabel(u"Resize Picture")
    ax2.imshow(re_img)
    # Reshape to the input shape the network expects
    img_ready = re_img.reshape((1, 224, 224, 3))
    return img_ready


# Convert a probability to a percentage string
def percent(value):
    return '%.2f%%' % (value * 100)
```
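The centre crop in load_image relies on integer offsets: in Python 3, `/` returns a float, which cannot be used as a slice index, hence `//`. Below is a standalone sketch of the same crop; the helper name `center_crop_square` and the 3x5 test array are hypothetical.

```python
import numpy as np


def center_crop_square(img):
    """Crop the largest centred square, mirroring the crop in utils.load_image."""
    short_edge = min(img.shape[:2])
    # Integer division keeps the offsets usable as slice indices
    y = (img.shape[0] - short_edge) // 2
    x = (img.shape[1] - short_edge) // 2
    return img[y:y + short_edge, x:x + short_edge]


# Hypothetical 3x5 single-channel "image" whose pixel value is its column index
img = np.tile(np.arange(5), (3, 1))
crop = center_crop_square(img)
print(crop.shape)  # (3, 3)
print(crop[0])     # [1 2 3]
```

For a wide image the crop trims equally from left and right; for a tall one it trims from top and bottom, so the square keeps the middle of the picture before it is resized to 224x224.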

Nclasses.py

Contains the labels dictionary.

Source code download

vgg16.npy

Contains all the parameters of the neural network.

Source code download