Analyze

Analyzes the given text and returns the tokens it produces.

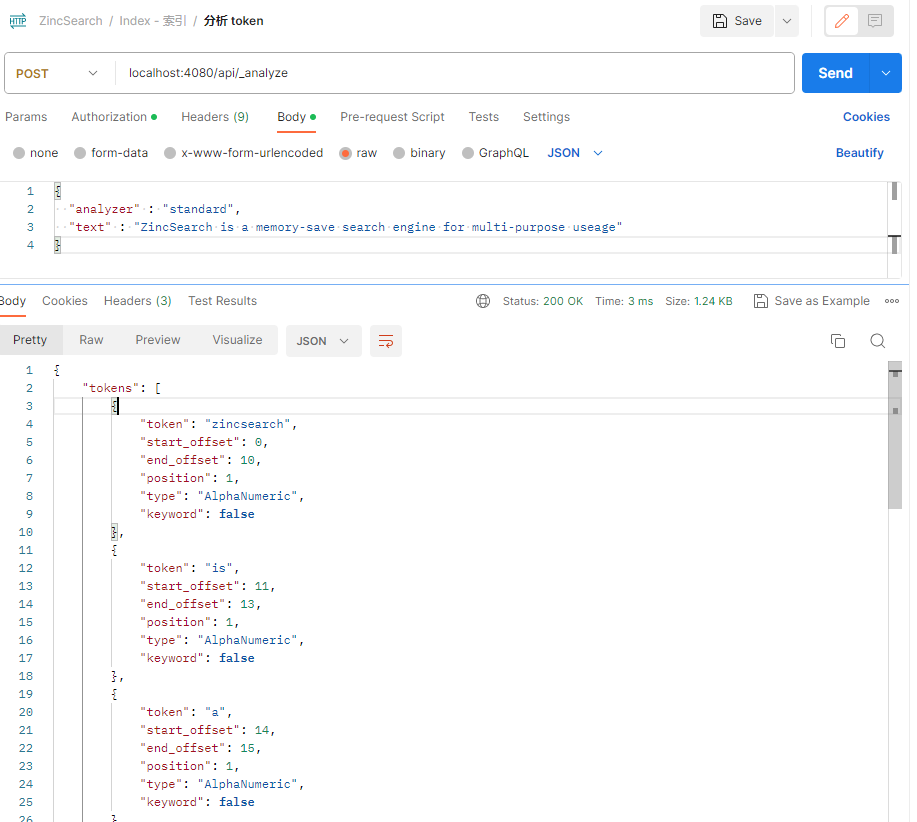

Request example

POST /api/_analyze

Request parameters:

{"analyzer" : "standard","text" : "50 first dates"}
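As a minimal sketch, the request above could be assembled in Python with the standard library. The base URL `http://localhost:4080` and the helper name `build_analyze_request` are assumptions for illustration, not part of the original page; the server may also require credentials, which are not shown here.

```python
import json
import urllib.request

# Hypothetical base URL; replace it with the address of your own server.
BASE_URL = "http://localhost:4080"


def build_analyze_request(body: dict, base_url: str = BASE_URL) -> urllib.request.Request:
    """Build (but do not send) a POST request for the /api/_analyze endpoint."""
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/api/_analyze",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = build_analyze_request({"analyzer": "standard", "text": "50 first dates"})

# Sending the request needs a running server (and possibly authentication):
# with urllib.request.urlopen(request) as response:
#     result = json.load(response)
```

Building the request separately from sending it keeps the sketch checkable without a live server.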

Response example

{"tokens": [{"end_offset": 2,"keyword": false,"position": 1,"start_offset": 0,"token": "50","type": "Numeric"},{"end_offset": 8,"keyword": false,"position": 1,"start_offset": 3,"token": "first","type": "AlphaNumeric"},{"end_offset": 14,"keyword": false,"position": 1,"start_offset": 9,"token": "dates","type": "AlphaNumeric"}]}
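To show how the offsets in the response relate to the input, the sketch below parses the response above and slices the original text with each token's `start_offset` and `end_offset`. The helper name `token_spans` is hypothetical, and the sketch assumes the offsets can be used directly as string indices, which holds for this ASCII input but may not hold for non-ASCII text if the offsets are byte-based.

```python
import json

# The response from the example above, pasted verbatim as a string.
RESPONSE = (
    '{"tokens": ['
    '{"end_offset": 2,"keyword": false,"position": 1,"start_offset": 0,"token": "50","type": "Numeric"},'
    '{"end_offset": 8,"keyword": false,"position": 1,"start_offset": 3,"token": "first","type": "AlphaNumeric"},'
    '{"end_offset": 14,"keyword": false,"position": 1,"start_offset": 9,"token": "dates","type": "AlphaNumeric"}'
    ']}'
)
TEXT = "50 first dates"


def token_spans(response: dict, text: str) -> list[tuple[str, str, str]]:
    """Return (token, type, source slice) for every token in the response."""
    return [
        (t["token"], t["type"], text[t["start_offset"]:t["end_offset"]])
        for t in response["tokens"]
    ]


spans = token_spans(json.loads(RESPONSE), TEXT)
for token, token_type, source in spans:
    print(f"{token!r} ({token_type}) came from {source!r}")
```

For this input, each token matches the slice of the text that its offsets point to.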

Use a specific analyzer

{"analyzer" : "standard","text" : "50 first dates"}

Use a specific tokenizer

{"tokenizer" : "standard","text" : "50 first dates"}

Use a specific tokenizer together with filters

{"tokenizer" : "standard","char_filter" : ["html"],"token_filter" : ["camel_case"],"text" : "50 first dates"}
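The three request bodies above differ only in which optional fields are set. A small helper, sketched here under the assumption that the field names are exactly those shown in the examples (`analyzer`, `tokenizer`, `char_filter`, `token_filter`, `text`), can build any of them. The function name `analyze_body` is hypothetical, and it does not validate which combinations the server accepts.

```python
import json
from typing import Optional


def analyze_body(
    text: str,
    analyzer: Optional[str] = None,
    tokenizer: Optional[str] = None,
    char_filter: Optional[list] = None,
    token_filter: Optional[list] = None,
) -> dict:
    """Build an _analyze request body, leaving out any option that is not set."""
    optional = {
        "analyzer": analyzer,
        "tokenizer": tokenizer,
        "char_filter": char_filter,
        "token_filter": token_filter,
    }
    body = {name: value for name, value in optional.items() if value is not None}
    body["text"] = text
    return body


# The three variants shown above.
with_analyzer = analyze_body("50 first dates", analyzer="standard")
with_tokenizer = analyze_body("50 first dates", tokenizer="standard")
with_filters = analyze_body(
    "50 first dates",
    tokenizer="standard",
    char_filter=["html"],
    token_filter=["camel_case"],
)
print(json.dumps(with_filters))
```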

Example