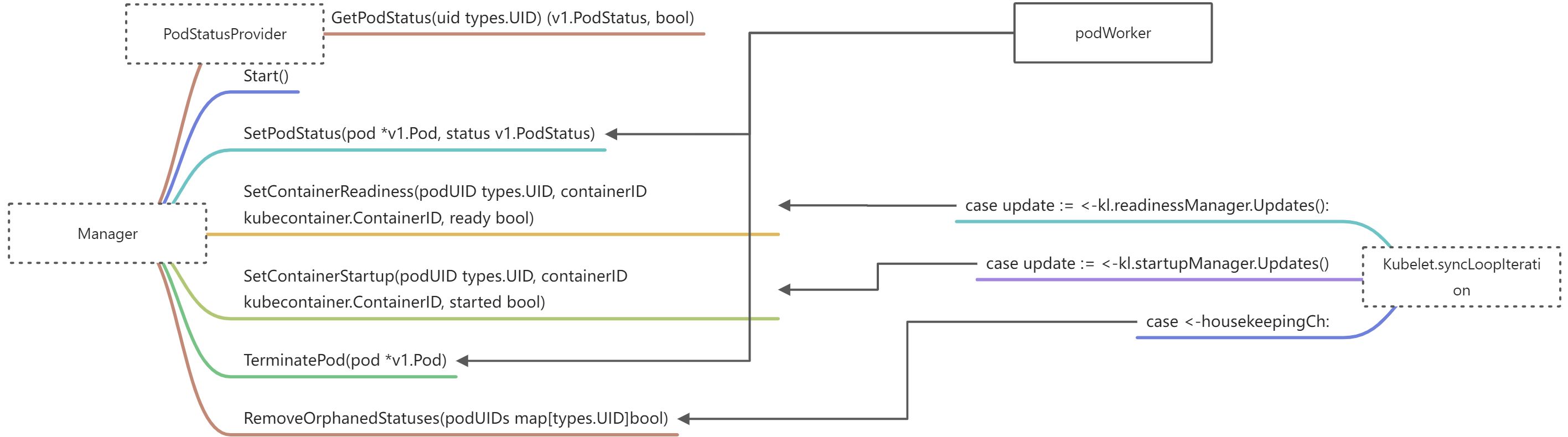

Flow for detecting Pod status changes and probe state changes

004. kubelet container status synchronization management

Code path: /pkg/kubelet/status/status_manager.go

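Interface definitions

PodStatusProvider: provides a method for retrieving the cached PodStatus of a pod.

Manager: holds the latest Pod statuses and is also responsible for syncing updates to the API Server.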

    // PodStatusProvider knows how to provide status for a pod. It's intended to be used by other components
    // that need to introspect status.
    type PodStatusProvider interface {
        // GetPodStatus returns the cached status for the provided pod UID, as well as whether it
        // was a cache hit.
        GetPodStatus(uid types.UID) (v1.PodStatus, bool)
    }

    // Manager is the Source of truth for kubelet pod status, and should be kept up-to-date with
    // the latest v1.PodStatus. It also syncs updates back to the API server.
    type Manager interface {
        PodStatusProvider

        // Start the API server status sync loop.
        Start()

        // SetPodStatus caches updates the cached status for the given pod, and triggers a status update.
        SetPodStatus(pod *v1.Pod, status v1.PodStatus)

        // SetContainerReadiness updates the cached container status with the given readiness, and
        // triggers a status update.
        SetContainerReadiness(podUID types.UID, containerID kubecontainer.ContainerID, ready bool)

        // SetContainerStartup updates the cached container status with the given startup, and
        // triggers a status update.
        SetContainerStartup(podUID types.UID, containerID kubecontainer.ContainerID, started bool)

        // TerminatePod resets the container status for the provided pod to terminated and triggers
        // a status update.
        TerminatePod(pod *v1.Pod)

        // RemoveOrphanedStatuses scans the status cache and removes any entries for pods not included in
        // the provided podUIDs.
        RemoveOrphanedStatuses(podUIDs map[types.UID]bool)
    }

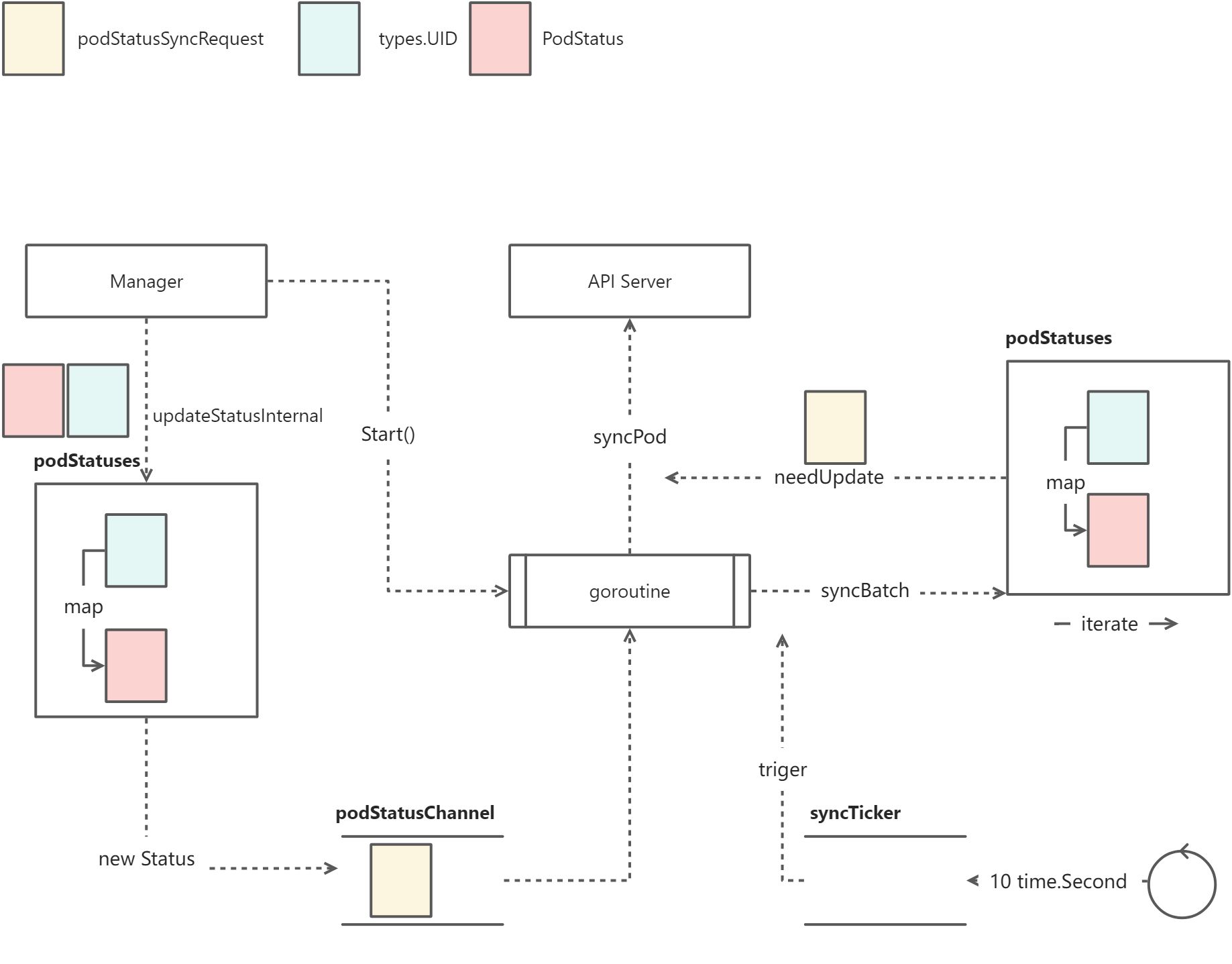

Interaction diagram between the Status Manager and the Kubelet

Note that every one of these paths eventually flows into updateStatusInternal, so that function is the core logic to focus on.
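The funnel pattern can be illustrated with a minimal sketch in which several public setters delegate to one internal update function. This is not the kubelet implementation: `podStatus`, `cache`, and `updateInternal` are hypothetical, simplified stand-ins, and pod UIDs and container names are plain strings here.

```go
package main

import (
	"fmt"
	"sync"
)

// podStatus is a simplified, hypothetical stand-in for v1.PodStatus.
type podStatus struct {
	Phase string
	Ready map[string]bool // container name -> readiness
}

// cache is a hypothetical stand-in for the status manager.
type cache struct {
	mu       sync.Mutex
	statuses map[string]podStatus // pod UID -> cached status
	updates  int                  // how many calls reached updateInternal
}

func newCache() *cache {
	return &cache{statuses: map[string]podStatus{}}
}

// SetPodStatus replaces the whole status and delegates to updateInternal.
func (c *cache) SetPodStatus(uid string, s podStatus) {
	c.mu.Lock()
	defer c.mu.Unlock()
	c.updateInternal(uid, s)
}

// SetContainerReadiness copies the cached status, changes one field,
// and delegates to the same internal function.
func (c *cache) SetContainerReadiness(uid, container string, ready bool) {
	c.mu.Lock()
	defer c.mu.Unlock()
	old, ok := c.statuses[uid]
	if !ok {
		return // unknown pod: nothing to update
	}
	next := podStatus{Phase: old.Phase, Ready: map[string]bool{}}
	for name, r := range old.Ready {
		next.Ready[name] = r
	}
	next.Ready[container] = ready
	c.updateInternal(uid, next)
}

// updateInternal is the single place where the cache is written.
// The caller must hold c.mu.
func (c *cache) updateInternal(uid string, s podStatus) {
	c.statuses[uid] = s
	c.updates++
}

func main() {
	c := newCache()
	c.SetPodStatus("uid-1", podStatus{Phase: "Running", Ready: map[string]bool{"app": false}})
	c.SetContainerReadiness("uid-1", "app", true)
	fmt.Println(c.updates, c.statuses["uid-1"].Ready["app"]) // prints "2 true"
}
```

Keeping a single write path means that validation, versioning, and change detection only need to be implemented once, whichever setter the caller used.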

Structs

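PodStatus: represents information about the status of a pod. The status may lag behind the actual state of the system, especially when the node hosting the pod cannot contact the control plane. (Note: PodStatus has many fields; see the full definition:)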

    // PodStatus represents information about the status of a pod. Status may trail the actual
    // state of a system, especially if the node that hosts the pod cannot contact the control
    // plane.
    type PodStatus struct {
        // The phase of a Pod is a simple, high-level summary of where the Pod is in its lifecycle.
        // The conditions array, the reason and message fields, and the individual container status
        // arrays contain more detail about the pod's status.
        // There are five possible phase values:
        //
        // Pending: The pod has been accepted by the Kubernetes system, but one or more of the
        // container images has not been created. This includes time before being scheduled as
        // well as time spent downloading images over the network, which could take a while.
        // Running: The pod has been bound to a node, and all of the containers have been created.
        // At least one container is still running, or is in the process of starting or restarting.
        // Succeeded: All containers in the pod have terminated in success, and will not be restarted.
        // Failed: All containers in the pod have terminated, and at least one container has
        // terminated in failure. The container either exited with non-zero status or was terminated
        // by the system.
        // Unknown: For some reason the state of the pod could not be obtained, typically due to an
        // error in communicating with the host of the pod.
        //
        // More info: https://kubernetes.io/docs/concepts/workloads/pods/pod-lifecycle#pod-phase
        // +optional
        Phase PodPhase `json:"phase,omitempty" protobuf:"bytes,1,opt,name=phase,casttype=PodPhase"`
        // Current service state of pod.
        // More info: https://kubernetes.io/docs/concepts/workloads/pods/pod-lifecycle#pod-conditions
        // +optional
        // +patchMergeKey=type
        // +patchStrategy=merge
        Conditions []PodCondition `json:"conditions,omitempty" patchStrategy:"merge" patchMergeKey:"type" protobuf:"bytes,2,rep,name=conditions"`
        // A human readable message indicating details about why the pod is in this condition.
        // +optional
        Message string `json:"message,omitempty" protobuf:"bytes,3,opt,name=message"`
        // A brief CamelCase message indicating details about why the pod is in this state.
        // e.g. 'Evicted'
        // +optional
        Reason string `json:"reason,omitempty" protobuf:"bytes,4,opt,name=reason"`
        // nominatedNodeName is set only when this pod preempts other pods on the node, but it cannot be
        // scheduled right away as preemption victims receive their graceful termination periods.
        // This field does not guarantee that the pod will be scheduled on this node. Scheduler may decide
        // to place the pod elsewhere if other nodes become available sooner. Scheduler may also decide to
        // give the resources on this node to a higher priority pod that is created after preemption.
        // As a result, this field may be different than PodSpec.nodeName when the pod is
        // scheduled.
        // +optional
        NominatedNodeName string `json:"nominatedNodeName,omitempty" protobuf:"bytes,11,opt,name=nominatedNodeName"`
        // IP address of the host to which the pod is assigned. Empty if not yet scheduled.
        // +optional
        HostIP string `json:"hostIP,omitempty" protobuf:"bytes,5,opt,name=hostIP"`
        // IP address allocated to the pod. Routable at least within the cluster.
        // Empty if not yet allocated.
        // +optional
        PodIP string `json:"podIP,omitempty" protobuf:"bytes,6,opt,name=podIP"`
        // podIPs holds the IP addresses allocated to the pod. If this field is specified, the 0th entry must
        // match the podIP field. Pods may be allocated at most 1 value for each of IPv4 and IPv6. This list
        // is empty if no IPs have been allocated yet.
        // +optional
        // +patchStrategy=merge
        // +patchMergeKey=ip
        PodIPs []PodIP `json:"podIPs,omitempty" protobuf:"bytes,12,rep,name=podIPs" patchStrategy:"merge" patchMergeKey:"ip"`
        // RFC 3339 date and time at which the object was acknowledged by the Kubelet.
        // This is before the Kubelet pulled the container image(s) for the pod.
        // +optional
        StartTime *metav1.Time `json:"startTime,omitempty" protobuf:"bytes,7,opt,name=startTime"`
        // The list has one entry per init container in the manifest. The most recent successful
        // init container will have ready = true, the most recently started container will have
        // startTime set.
        // More info: https://kubernetes.io/docs/concepts/workloads/pods/pod-lifecycle#pod-and-container-status
        InitContainerStatuses []ContainerStatus `json:"initContainerStatuses,omitempty" protobuf:"bytes,10,rep,name=initContainerStatuses"`
        // The list has one entry per container in the manifest.
        // More info: https://kubernetes.io/docs/concepts/workloads/pods/pod-lifecycle#pod-and-container-status
        // +optional
        ContainerStatuses []ContainerStatus `json:"containerStatuses,omitempty" protobuf:"bytes,8,rep,name=containerStatuses"`
        // The Quality of Service (QOS) classification assigned to the pod based on resource requirements
        // See PodQOSClass type for available QOS classes
        // More info: https://git.k8s.io/community/contributors/design-proposals/node/resource-qos.md
        // +optional
        QOSClass PodQOSClass `json:"qosClass,omitempty" protobuf:"bytes,9,rep,name=qosClass"`
        // Status for any ephemeral containers that have run in this pod.
        // This field is beta-level and available on clusters that haven't disabled the EphemeralContainers feature gate.
        // +optional
        EphemeralContainerStatuses []ContainerStatus `json:"ephemeralContainerStatuses,omitempty" protobuf:"bytes,13,rep,name=ephemeralContainerStatuses"`
    }

versionedPodStatus: wraps v1.PodStatus and adds a version number, which ensures that a stale status is never sent to the API Server.

    // A wrapper around v1.PodStatus that includes a version to enforce that stale pod statuses are
    // not sent to the API server.
    type versionedPodStatus struct {
        status v1.PodStatus
        // Monotonically increasing version number (per pod).
        version uint64
        // Pod name & namespace, for sending updates to API server.
        podName      string
        podNamespace string
    }
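A per-pod, monotonically increasing version makes it cheap to reject stale updates: remember the last version delivered for each pod and refuse anything that is not newer. The sketch below shows the idea under simplifying assumptions; `versioned`, `sender`, and `trySend` are hypothetical names, and the actual API call is left out.

```go
package main

import "fmt"

// versioned is a hypothetical, simplified counterpart of versionedPodStatus.
type versioned struct {
	phase   string
	version uint64
}

// sender remembers the latest version delivered per pod UID.
type sender struct {
	sent map[string]uint64
}

// trySend reports whether the status was accepted for sending. It refuses
// any status whose version is not newer than the last one sent for that pod.
func (s *sender) trySend(uid string, st versioned) bool {
	if last, ok := s.sent[uid]; ok && st.version <= last {
		return false // stale or duplicate: do not send
	}
	// A real implementation would call the API server here.
	s.sent[uid] = st.version
	return true
}

func main() {
	s := &sender{sent: map[string]uint64{}}
	fmt.Println(s.trySend("uid-1", versioned{phase: "Pending", version: 1})) // true
	fmt.Println(s.trySend("uid-1", versioned{phase: "Running", version: 2})) // true
	fmt.Println(s.trySend("uid-1", versioned{phase: "Pending", version: 1})) // false
}
```

The third call is rejected because version 1 arrives after version 2 has already been recorded for the same pod.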

podStatusSyncRequest: a wrapper struct for a pod status sync request.

    type podStatusSyncRequest struct {
        podUID types.UID
        status versionedPodStatus
    }

manager: a thread-safe implementation that syncs Pod statuses to the API Server and performs a write only when the status has actually changed.

    // Updates pod statuses in apiserver. Writes only when new status has changed.
    // All methods are thread-safe.
    type manager struct {
        kubeClient clientset.Interface
        podManager kubepod.Manager
        // Map from pod UID to sync status of the corresponding pod.
        podStatuses      map[types.UID]versionedPodStatus
        podStatusesLock  sync.RWMutex
        podStatusChannel chan podStatusSyncRequest
        // Map from (mirror) pod UID to latest status version successfully sent to the API server.
        // apiStatusVersions must only be accessed from the sync thread.
        apiStatusVersions map[kubetypes.MirrorPodUID]uint64
        podDeletionSafety PodDeletionSafetyProvider
    }
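The combination of a lock-protected map and a request channel can be sketched as follows. This is a simplified illustration, not the kubelet code: `statusCache`, `entry`, and `syncRequest` are hypothetical types, a status is reduced to a phase string, and the sketch assumes that a request dropped because the channel is full would be picked up by some periodic full sync that is not shown.

```go
package main

import (
	"fmt"
	"sync"
)

// syncRequest is a hypothetical, simplified counterpart of podStatusSyncRequest.
type syncRequest struct {
	uid     string
	phase   string
	version uint64
}

// entry is the cached status for one pod plus its version.
type entry struct {
	phase   string
	version uint64
}

// statusCache guards the map with an RWMutex and queues sync requests on a channel.
type statusCache struct {
	mu      sync.RWMutex
	entries map[string]entry
	ch      chan syncRequest
}

func newStatusCache(buffer int) *statusCache {
	return &statusCache{entries: map[string]entry{}, ch: make(chan syncRequest, buffer)}
}

// set caches the phase and queues a sync request, but only when the phase
// differs from the cached one. It reports whether a change was recorded.
func (c *statusCache) set(uid, phase string) bool {
	c.mu.Lock()
	defer c.mu.Unlock()
	old, ok := c.entries[uid]
	if ok && old.phase == phase {
		return false // unchanged: no write, no sync request
	}
	next := entry{phase: phase, version: old.version + 1}
	c.entries[uid] = next
	select {
	case c.ch <- syncRequest{uid: uid, phase: phase, version: next.version}:
	default:
		// Channel full: skip queuing (assumption: a periodic sync catches up later).
	}
	return true
}

// get returns the cached phase and whether the pod was found.
func (c *statusCache) get(uid string) (string, bool) {
	c.mu.RLock()
	defer c.mu.RUnlock()
	e, ok := c.entries[uid]
	return e.phase, ok
}

func main() {
	c := newStatusCache(4)
	fmt.Println(c.set("uid-1", "Pending")) // true
	fmt.Println(c.set("uid-1", "Pending")) // false
	fmt.Println(c.set("uid-1", "Running")) // true
	fmt.Println(len(c.ch))                 // 2
}
```

Readers take only the read lock, so lookups do not block each other, and the non-blocking send keeps a slow consumer from stalling callers that hold the write lock.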

Core logic

Exercise

PR needed: simplify the logic of needsReconcile

Help Wanted

Description: the static pod's UID has to be passed in here, because the pod manager only supports looking up a mirror pod by its static pod.

Readers can open a PR for this as an exercise.

    // needsReconcile compares the given status with the status in the pod manager (which
    // in fact comes from apiserver), returns whether the status needs to be reconciled with
    // the apiserver. Now when pod status is inconsistent between apiserver and kubelet,
    // kubelet should forcibly send an update to reconcile the inconsistence, because kubelet
    // should be the source of truth of pod status.
    // NOTE(random-liu): It's simpler to pass in mirror pod uid and get mirror pod by uid, but
    // now the pod manager only supports getting mirror pod by static pod, so we have to pass
    // static pod uid here.
    // TODO(random-liu): Simplify the logic when mirror pod manager is added.
    func (m *manager) needsReconcile(uid types.UID, status v1.PodStatus) bool {
        // The pod could be a static pod, so we should translate first.
        pod, ok := m.podManager.GetPodByUID(uid)
        if !ok {
            klog.V(4).InfoS("Pod has been deleted, no need to reconcile", "podUID", string(uid))
            return false
        }
        // If the pod is a static pod, we should check its mirror pod, because only status in mirror pod is meaningful to us.
        if kubetypes.IsStaticPod(pod) {
            mirrorPod, ok := m.podManager.GetMirrorPodByPod(pod)
            if !ok {
                klog.V(4).InfoS("Static pod has no corresponding mirror pod, no need to reconcile", "pod", klog.KObj(pod))
                return false
            }
            pod = mirrorPod
        }

        podStatus := pod.Status.DeepCopy()
        normalizeStatus(pod, podStatus)

        if isPodStatusByKubeletEqual(podStatus, &status) {
            // If the status from the source is the same with the cached status,
            // reconcile is not needed. Just return.
            return false
        }
        klog.V(3).InfoS("Pod status is inconsistent with cached status for pod, a reconciliation should be triggered",
            "pod", klog.KObj(pod),
            "statusDiff", diff.ObjectDiff(podStatus, &status))

        return true
    }
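Before attempting the exercise, it may help to see the decision flow of needsReconcile in isolation. The sketch below mirrors its three steps (look up the pod, translate a static pod to its mirror pod, compare statuses) with hypothetical, simplified types: `pod` and `podStore` are not Kubernetes types, a status is reduced to a phase string, and normalization is omitted.

```go
package main

import "fmt"

// pod is a hypothetical, simplified pod record.
type pod struct {
	uid    string
	static bool
	phase  string // status as last seen from the API server
}

// podStore is a hypothetical stand-in for the pod manager. As in the
// original, a mirror pod can only be looked up through its static pod.
type podStore struct {
	byUID   map[string]pod
	mirrors map[string]pod // static pod UID -> mirror pod
}

// needsReconcile reports whether the cached phase disagrees with the
// phase known for the pod (or for its mirror pod, if the pod is static).
func (s podStore) needsReconcile(uid, cachedPhase string) bool {
	p, ok := s.byUID[uid]
	if !ok {
		return false // pod deleted: nothing to reconcile
	}
	if p.static {
		m, ok := s.mirrors[p.uid]
		if !ok {
			return false // no mirror pod: nothing to reconcile
		}
		p = m // only the mirror pod's status is meaningful
	}
	return p.phase != cachedPhase
}

func main() {
	s := podStore{
		byUID: map[string]pod{
			"regular": {uid: "regular", phase: "Running"},
			"static":  {uid: "static", static: true, phase: "Pending"},
		},
		mirrors: map[string]pod{
			"static": {uid: "mirror", phase: "Running"},
		},
	}
	fmt.Println(s.needsReconcile("regular", "Running")) // false
	fmt.Println(s.needsReconcile("regular", "Failed"))  // true
	fmt.Println(s.needsReconcile("static", "Running"))  // false
}
```

The last call returns false even though the static pod's own phase is "Pending", because the comparison runs against the mirror pod's status.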